This class represents the base interface for policies.

#include <AIToolbox/PolicyInterface.hpp>

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | PolicyInterface (State s, Action a) | Basic constructor. |
| virtual | ~PolicyInterface () | Basic virtual destructor. |
| virtual Action | sampleAction (const Sampling &s) const =0 | This function chooses a random action for state s, following the policy distribution. |
| virtual double | getActionProbability (const Sampling &s, const Action &a) const =0 | This function returns the probability of taking the specified action in the specified state. |
| const State & | getS () const | This function returns the number of states of the world. |
| const Action & | getA () const | This function returns the number of actions available to the agent. |

Protected Attributes

| Type | Member |
|---|---|
| State | S |
| Action | A |
| RandomEngine | rand_ |

Detailed Description

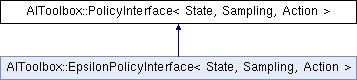

template<typename State, typename Sampling, typename Action>
class AIToolbox::PolicyInterface< State, Sampling, Action >

This class represents the base interface for policies.

This class represents an interface that all policies must conform to. The interface is generic because different methods may store and compute policies in very different ways. It therefore only asks for a way to sample actions from a policy and to query the probability of each action.

This class is templated in order to work with as many problem classes as possible. It implements almost no functionality itself (only the getS() and getA() accessors). Instead, it should be used as a guide when deciding which methods a new policy must provide.
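As a guide to what a concrete policy must provide, here is a minimal sketch of the MDP case, where State, Sampling and Action are all std::size_t. It does not include the real AIToolbox header. PolicyInterfaceSketch is a hypothetical, simplified mirror of the interface documented on this page. RandomEngine is assumed to be std::mt19937, and UniformPolicy is a hypothetical example policy that picks every action with equal probability.

```cpp
#include <cassert>
#include <cstddef>
#include <random>

// Assumption: RandomEngine is a std::mt19937, seeded with a fixed value here
// so that runs are reproducible.
using RandomEngine = std::mt19937;

// Hypothetical, simplified mirror of AIToolbox::PolicyInterface (not the real header).
template <typename State, typename Sampling, typename Action>
class PolicyInterfaceSketch {
public:
    PolicyInterfaceSketch(State s, Action a) : S(s), A(a), rand_(12345u) {}
    virtual ~PolicyInterfaceSketch() = default;

    virtual Action sampleAction(const Sampling& s) const = 0;
    virtual double getActionProbability(const Sampling& s, const Action& a) const = 0;

    const State& getS() const { return S; }
    const Action& getA() const { return A; }

protected:
    State S;                     // number of states of the world
    Action A;                    // number of actions available to the agent
    mutable RandomEngine rand_;  // mutable so that const sampling can advance it
};

// Hypothetical MDP policy: every action is equally likely in every state.
class UniformPolicy : public PolicyInterfaceSketch<std::size_t, std::size_t, std::size_t> {
    using Base = PolicyInterfaceSketch<std::size_t, std::size_t, std::size_t>;

public:
    UniformPolicy(std::size_t s, std::size_t a) : Base(s, a) { assert(a > 0); }

    std::size_t sampleAction(const std::size_t& s) const override {
        assert(s < S);  // states are indices in [0, S)
        std::uniform_int_distribution<std::size_t> dist(0, A - 1);
        return dist(rand_);
    }

    double getActionProbability(const std::size_t& s, const std::size_t& a) const override {
        assert(s < S);
        return a < A ? 1.0 / static_cast<double>(A) : 0.0;
    }
};
```

Because the base class stores the sizes, getS() and getA() come for free. The derived class only has to implement the two pure virtual functions.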

The State and Sampling parameters are separate because in POMDPs states are still "integer" indices, but we often want to sample from beliefs. Having two separate parameters allows for this. In the MDP case the two types are the same.
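To illustrate why State and Sampling are separate, the sketch below keeps State and Action as std::size_t but samples from a belief. As an assumption, the belief is represented as a std::vector<double> with one probability per state. TableBeliefPolicy is hypothetical: it maps each state to a fixed action, samples a state from the belief and returns that state's action. The probability of an action is therefore the total belief mass on the states mapped to it. PolicyInterfaceSketch is a simplified stand-in for the documented interface, not the AIToolbox header.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <utility>
#include <vector>

using RandomEngine = std::mt19937;   // assumption: mirrors AIToolbox's engine type
using Belief = std::vector<double>;  // assumption: one probability per state

// Hypothetical, simplified mirror of AIToolbox::PolicyInterface (not the real header).
template <typename State, typename Sampling, typename Action>
class PolicyInterfaceSketch {
public:
    PolicyInterfaceSketch(State s, Action a) : S(s), A(a), rand_(12345u) {}
    virtual ~PolicyInterfaceSketch() = default;
    virtual Action sampleAction(const Sampling& s) const = 0;
    virtual double getActionProbability(const Sampling& s, const Action& a) const = 0;
    const State& getS() const { return S; }
    const Action& getA() const { return A; }

protected:
    State S;
    Action A;
    mutable RandomEngine rand_;
};

// State = size_t (integer states), Sampling = Belief, Action = size_t.
class TableBeliefPolicy : public PolicyInterfaceSketch<std::size_t, Belief, std::size_t> {
    using Base = PolicyInterfaceSketch<std::size_t, Belief, std::size_t>;

public:
    // table[s] is the action taken when the true state is s.
    TableBeliefPolicy(std::vector<std::size_t> table, std::size_t a)
        : Base(table.size(), a), table_(std::move(table)) {}

    // Sample a state from the belief, then return that state's action.
    std::size_t sampleAction(const Belief& b) const override {
        assert(b.size() == S);
        std::discrete_distribution<std::size_t> pickState(b.begin(), b.end());
        return table_[pickState(rand_)];
    }

    // P(a | b) = sum of b[s] over the states s whose table entry is a.
    double getActionProbability(const Belief& b, const std::size_t& a) const override {
        assert(b.size() == S);
        double p = 0.0;
        for (std::size_t s = 0; s < S; ++s)
            if (table_[s] == a) p += b[s];
        return p;
    }

private:
    std::vector<std::size_t> table_;
};
```

The integer state count still lives in S, while sampling and probability queries take a whole belief. This is exactly the split the two template parameters allow.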

- Template Parameters

  - State: This defines the type that is used to store the state space.
  - Sampling: This defines the type that is used to sample from the policy.
  - Action: This defines the type that is used to handle actions.

Constructor & Destructor Documentation

◆ PolicyInterface()

AIToolbox::PolicyInterface< State, Sampling, Action >::PolicyInterface ( State s, Action a )

Basic constructor.

- Parameters

  - s: The number of states of the world.
  - a: The number of actions available to the agent.

◆ ~PolicyInterface()

virtual AIToolbox::PolicyInterface< State, Sampling, Action >::~PolicyInterface () [virtual]

Basic virtual destructor.

Member Function Documentation

◆ getA()

const Action & AIToolbox::PolicyInterface< State, Sampling, Action >::getA () const

This function returns the number of actions available to the agent.

- Returns

  - The total number of actions.

◆ getActionProbability()

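virtual double AIToolbox::PolicyInterface< State, Sampling, Action >::getActionProbability ( const Sampling & s, const Action & a ) const [pure virtual]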

This function returns the probability of taking the specified action in the specified state.

- Parameters

  - s: The selected state (of type Sampling; in POMDPs this may be a belief).
  - a: The selected action.

- Returns

  - The probability of taking the selected action in the specified state.

Implemented in AIToolbox::EpsilonPolicyInterface< void, void, size_t >, AIToolbox::EpsilonPolicyInterface< State, State, Action >, AIToolbox::EpsilonPolicyInterface< size_t, size_t, size_t >, AIToolbox::MDP::WoLFPolicy, AIToolbox::MDP::PGAAPPPolicy, AIToolbox::MDP::QSoftmaxPolicy, AIToolbox::MDP::PolicyWrapper, AIToolbox::MDP::QGreedyPolicy, AIToolbox::MDP::BanditPolicyAdaptor< BanditPolicy >, and AIToolbox::EpsilonPolicyInterface< State, Sampling, Action >.
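Since getActionProbability() describes a probability distribution over actions, every implementation should pass a simple sanity check. For a fixed state, the values over all actions should lie in [0, 1] and sum to 1. The helper below is a hypothetical sketch, not part of AIToolbox. It assumes integral actions numbered from 0 to getA() - 1. FixedPolicy is a hypothetical stand-in with a state-independent distribution.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical helper: true if the probabilities that `policy` assigns to
// actions 0 .. getA()-1 at sampling point `s` lie in [0, 1] and sum to 1.
template <typename Policy, typename Sampling>
bool isDistribution(const Policy& policy, const Sampling& s, double tol = 1e-9) {
    double total = 0.0;
    for (std::size_t a = 0; a < policy.getA(); ++a) {
        const double p = policy.getActionProbability(s, a);
        if (p < 0.0 || p > 1.0) return false;
        total += p;
    }
    return std::fabs(total - 1.0) <= tol;
}

// Hypothetical stand-in policy whose distribution does not depend on the state.
class FixedPolicy {
public:
    explicit FixedPolicy(std::vector<double> probs) : probs_(std::move(probs)) {}
    std::size_t getA() const { return probs_.size(); }
    double getActionProbability(std::size_t /*s*/, std::size_t a) const { return probs_[a]; }

private:
    std::vector<double> probs_;
};
```

The helper is written as a function template over any type exposing getA() and getActionProbability(). As a result, it can be applied to a concrete policy without depending on its base class.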

◆ getS()

const State & AIToolbox::PolicyInterface< State, Sampling, Action >::getS () const

This function returns the number of states of the world.

- Returns

  - The total number of states.

◆ sampleAction()

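virtual Action AIToolbox::PolicyInterface< State, Sampling, Action >::sampleAction ( const Sampling & s ) const [pure virtual]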

This function chooses a random action for state s, following the policy distribution.

- Parameters

  - s: The state (of type Sampling) from which to sample an action; in POMDPs this may be a belief.

- Returns

  - The chosen action.

Implemented in AIToolbox::EpsilonPolicyInterface< void, void, size_t >, AIToolbox::EpsilonPolicyInterface< State, State, Action >, AIToolbox::MDP::WoLFPolicy, AIToolbox::EpsilonPolicyInterface< size_t, size_t, size_t >, AIToolbox::MDP::PGAAPPPolicy, AIToolbox::MDP::QSoftmaxPolicy, AIToolbox::MDP::PolicyWrapper, AIToolbox::MDP::BanditPolicyAdaptor< BanditPolicy >, AIToolbox::MDP::QGreedyPolicy, and AIToolbox::EpsilonPolicyInterface< State, Sampling, Action >.
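An implementation of sampleAction() should agree with its getActionProbability(): over many draws, the frequency of each sampled action should approach its reported probability. The sketch below is a minimal Monte Carlo check, assuming integral actions numbered from 0. The helper names and FixedPolicy are hypothetical. FixedPolicy samples with std::discrete_distribution from a mutable std::mt19937.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <utility>
#include <vector>

// Hypothetical helper: draw n actions for sampling point s and return the
// empirical frequency of each action.
template <typename Policy, typename Sampling>
std::vector<double> empiricalFrequencies(const Policy& policy, const Sampling& s, std::size_t n) {
    assert(n > 0);
    std::vector<double> freq(policy.getA(), 0.0);
    for (std::size_t i = 0; i < n; ++i) freq[policy.sampleAction(s)] += 1.0;
    for (double& f : freq) f /= static_cast<double>(n);
    return freq;
}

// Hypothetical helper: true if every empirical frequency is within tol of the
// probability reported by getActionProbability().
template <typename Policy, typename Sampling>
bool samplesMatchProbabilities(const Policy& policy, const Sampling& s, std::size_t n, double tol) {
    const std::vector<double> freq = empiricalFrequencies(policy, s, n);
    for (std::size_t a = 0; a < freq.size(); ++a)
        if (std::fabs(freq[a] - policy.getActionProbability(s, a)) > tol) return false;
    return true;
}

// Hypothetical stand-in policy with a fixed, state-independent distribution.
class FixedPolicy {
public:
    explicit FixedPolicy(std::vector<double> probs) : probs_(std::move(probs)) {}
    std::size_t getA() const { return probs_.size(); }
    std::size_t sampleAction(std::size_t /*s*/) const {
        std::discrete_distribution<std::size_t> dist(probs_.begin(), probs_.end());
        return dist(rand_);
    }
    double getActionProbability(std::size_t /*s*/, std::size_t a) const { return probs_[a]; }

private:
    std::vector<double> probs_;
    mutable std::mt19937 rand_{42u};  // fixed seed for reproducible checks
};
```

Choose the tolerance relative to the standard error sqrt(p(1 - p)/n). With n = 100000 that error is below 0.0016 for any p, so a tolerance of 0.01 leaves a wide margin.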

Member Data Documentation

◆ A

Action AIToolbox::PolicyInterface< State, Sampling, Action >::A [protected]

◆ rand_

RandomEngine AIToolbox::PolicyInterface< State, Sampling, Action >::rand_ [mutable], [protected]
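rand_ is mutable because sampleAction() is a const member function, yet it has to advance the random engine on every call. The sketch below isolates that pattern in a hypothetical CoinPolicy, assuming a std::mt19937 engine. It illustrates the C++ idiom and is not AIToolbox code.

```cpp
#include <cassert>
#include <cstddef>
#include <random>

// Hypothetical illustration of the mutable-engine idiom (not AIToolbox code).
class CoinPolicy {
public:
    explicit CoinPolicy(unsigned seed) : rand_(seed) {}

    // Logically const: sampling does not change the policy itself, only the
    // engine's internal state, which is why rand_ must be mutable.
    std::size_t sampleAction() const {
        std::bernoulli_distribution coin(0.5);
        return coin(rand_) ? 1 : 0;
    }

private:
    mutable std::mt19937 rand_;  // without `mutable`, sampleAction() would not compile
};
```

One consequence of this design is a thread-safety hazard. Calling a const sampling method on the same object from several threads at once races on the shared engine, unless an implementation adds its own synchronization.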

◆ S

State AIToolbox::PolicyInterface< State, Sampling, Action >::S [protected]

The documentation for this class was generated from the following file:

- include/AIToolbox/PolicyInterface.hpp