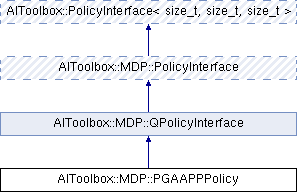

This class implements the PGA-APP learning algorithm.

#include <AIToolbox/MDP/Policies/PGAAPPPolicy.hpp>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | PGAAPPPolicy (const QFunction &q, double lRate=0.001, double predictionLength=3.0) | Basic constructor. |
| void | stepUpdateP (size_t s) | This function updates the policy based on changes in the QFunction. |
| virtual size_t | sampleAction (const size_t &s) const override | This function chooses an action for state s, following the policy distribution. |
| virtual double | getActionProbability (const size_t &s, const size_t &a) const override | This function returns the probability of taking the specified action in the specified state. |
| virtual Matrix2D | getPolicy () const override | This function returns a matrix containing all probabilities of the policy. |
| void | setLearningRate (double lRate) | This function sets the new learning rate. |
| double | getLearningRate () const | This function returns the current learning rate. |
| void | setPredictionLength (double pLength) | This function sets the new prediction length. |
| double | getPredictionLength () const | This function returns the current prediction length. |

Public Member Functions inherited from AIToolbox::MDP::QPolicyInterface

| Type | Member | Description |
|---|---|---|
| | QPolicyInterface (const QFunction &q) | Basic constructor. |
| const QFunction & | getQFunction () const | This function returns the underlying QFunction reference. |

Public Member Functions inherited from AIToolbox::PolicyInterface< size_t, size_t, size_t >

| Type | Member | Description |
|---|---|---|
| | PolicyInterface (size_t s, size_t a) | Basic constructor. |
| virtual | ~PolicyInterface () | Basic virtual destructor. |
| const size_t & | getS () const | This function returns the number of states of the world. |
| const size_t & | getA () const | This function returns the number of available actions to the agent. |

Additional Inherited Members

Public Types inherited from AIToolbox::MDP::PolicyInterface

| Kind | Declaration |
|---|---|
| using | Base = AIToolbox::PolicyInterface< size_t, size_t, size_t > |

Protected Attributes inherited from AIToolbox::MDP::QPolicyInterface

| Type | Member |
|---|---|
| const QFunction & | q_ |

Protected Attributes inherited from AIToolbox::PolicyInterface< size_t, size_t, size_t >

| Type | Member |
|---|---|
| size_t | S |
| size_t | A |
| RandomEngine | rand_ |

Detailed Description


This class implements the PGA-APP (Policy Gradient Ascent with Approximate Policy Prediction) learning algorithm for stochastic games. The underlying idea is that, rather than just modifying the policy over time by gradient ascent, we can try to predict where the opponents' policies are going and follow the gradient there. This should significantly speed up learning and convergence to a Nash equilibrium.
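As a reference point, the per-state update described in the original PGA-APP paper can be written as below, with $\pi$ the policy, $Q$ the QFunction, $\eta$ the learning rate (lRate) and $\gamma$ the prediction length (predictionLength; here it is not a discount factor). $\Pi_\Delta$ denotes a projection back onto the set of valid probability distributions. This is a sketch of the published rule, not necessarily a line-for-line account of what this class does internally.

```latex
\begin{aligned}
V(s) &= \sum_{a} \pi(s,a)\, Q(s,a) \\
\hat{\delta}(s,a) &= \begin{cases}
  Q(s,a) - V(s) & \text{if } \pi(s,a) = 1 \\
  \dfrac{Q(s,a) - V(s)}{1 - \pi(s,a)} & \text{otherwise}
\end{cases} \\
\delta(s,a) &= \hat{\delta}(s,a) - \gamma \, \lvert \hat{\delta}(s,a) \rvert \, \pi(s,a) \\
\pi(s,\cdot) &\leftarrow \Pi_\Delta\!\left( \pi(s,\cdot) + \eta \, \delta(s,\cdot) \right)
\end{aligned}
```

The extra $-\gamma \lvert \hat{\delta} \rvert \pi$ term is the approximate policy prediction: it is meant to approximate the gradient at a forecast policy rather than at the current one. With $\gamma = 0$ the rule reduces to a plain projected gradient ascent step.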

In the original paper, the QFunction was learned with QLearning and the policy was then tuned from it with PGA-APP, so you might want to do the same.
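A minimal sketch of the tabular Q-learning update that could feed this policy, assuming a plain `std::vector` table indexed as `q[s][a]`. The names `QTable` and `qLearningUpdate`, and the parameters `alpha` (Q-learning step size) and `gamma` (discount), are hypothetical and not part of AIToolbox; this only illustrates the update rule, not the library's QLearning class.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical tabular Q-function, indexed as q[state][action].
using QTable = std::vector<std::vector<double>>;

// One standard Q-learning update for the observed transition (s, a, r, s1):
//   Q(s,a) += alpha * (r + gamma * max_a' Q(s1,a') - Q(s,a))
// alpha is the Q-learning step size and gamma the discount factor
// (hypothetical parameter names, unrelated to PGA-APP's own parameters).
void qLearningUpdate(QTable &q, std::size_t s, std::size_t a, double r,
                     std::size_t s1, double alpha, double gamma) {
    assert(s < q.size() && s1 < q.size() && a < q[s].size());
    // Greedy estimate of the value of the next state.
    const double bestNext = *std::max_element(q[s1].begin(), q[s1].end());
    q[s][a] += alpha * (r + gamma * bestNext - q[s][a]);
}
```

After each such update for a state `s`, the PGA-APP policy for `s` would then be refreshed from the new Q-values, which is what stepUpdateP is documented to do.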

Constructor & Destructor Documentation

◆ PGAAPPPolicy()

AIToolbox::MDP::PGAAPPPolicy::PGAAPPPolicy(const QFunction & q, double lRate = 0.001, double predictionLength = 3.0)

Basic constructor.

See the corresponding setter functions for what each parameter does.

- Parameters
  - q: The QFunction from which to extract policy updates.
  - lRate: The learning rate, which controls how gradually the policy changes.
  - predictionLength: How far ahead to predict the opponents' policy changes.

Member Function Documentation

◆ getActionProbability()

virtual double AIToolbox::MDP::PGAAPPPolicy::getActionProbability(const size_t & s, const size_t & a) const override

This function returns the probability of taking the specified action in the specified state.

- Parameters
  - s: The selected state.
  - a: The selected action.

- Returns
  - The probability of taking the selected action in the specified state.

Implements AIToolbox::PolicyInterface< size_t, size_t, size_t >.

◆ getLearningRate()

double AIToolbox::MDP::PGAAPPPolicy::getLearningRate() const

This function returns the current learning rate.

- Returns
  - The current learning rate.

◆ getPolicy()

virtual Matrix2D AIToolbox::MDP::PGAAPPPolicy::getPolicy() const override

This function returns a matrix containing all probabilities of the policy.

Ideally, this function should be called only when the same policy values need to be accessed repeatedly and efficiently.

Implements AIToolbox::MDP::PolicyInterface.
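The trade-off described above can be sketched with a plain nested `std::vector` standing in for the policy table (in AIToolbox, getPolicy() returns a Matrix2D). `PolicyTable`, `actionProbability` and `stateValue` are hypothetical names. The idea is to take one snapshot of the whole table and read from it many times, instead of making one call per entry.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for an S x A policy table: p[s][a] = P(a | s).
using PolicyTable = std::vector<std::vector<double>>;

// Single-entry lookup, analogous to getActionProbability(s, a).
double actionProbability(const PolicyTable &p, std::size_t s, std::size_t a) {
    assert(s < p.size() && a < p[s].size());
    return p[s][a];
}

// Expected value of state s under the policy, reading every action's
// probability from one snapshot (analogous to calling getPolicy() once).
double stateValue(const PolicyTable &snapshot,
                  const std::vector<std::vector<double>> &q, std::size_t s) {
    assert(s < snapshot.size() && s < q.size() && snapshot[s].size() == q[s].size());
    double v = 0.0;
    for (std::size_t a = 0; a < snapshot[s].size(); ++a)
        v += snapshot[s][a] * q[s][a];
    return v;
}
```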

◆ getPredictionLength()

double AIToolbox::MDP::PGAAPPPolicy::getPredictionLength() const

This function returns the current prediction length.

- Returns
  - The current prediction length.

◆ sampleAction()

virtual size_t AIToolbox::MDP::PGAAPPPolicy::sampleAction(const size_t & s) const override

This function chooses an action for state s, following the policy distribution.

Note that, to improve learning, it may be useful to wrap this policy in an EpsilonPolicy to provide some exploration.

- Parameters
  - s: The state for which to sample an action.

- Returns
  - The chosen action.

Implements AIToolbox::PolicyInterface< size_t, size_t, size_t >.
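Sampling from one state's row of the policy, and the kind of exploration an epsilon wrapper adds, can be sketched with the standard `<random>` facilities. `sampleFromRow` and `sampleWithExploration` are hypothetical helpers; the epsilon logic only illustrates the general idea and is not a copy of AIToolbox's EpsilonPolicy, whose exact meaning of epsilon should be checked in its own documentation.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Sample an action index from one state's row of action probabilities.
std::size_t sampleFromRow(const std::vector<double> &row, std::mt19937 &rng) {
    assert(!row.empty());
    std::discrete_distribution<std::size_t> dist(row.begin(), row.end());
    return dist(rng);
}

// Hypothetical exploration wrapper: with probability epsilon pick an action
// uniformly at random, otherwise sample from the policy row itself.
std::size_t sampleWithExploration(const std::vector<double> &row, double epsilon,
                                  std::mt19937 &rng) {
    assert(!row.empty());
    std::uniform_real_distribution<double> coin(0.0, 1.0);
    if (coin(rng) < epsilon) {
        std::uniform_int_distribution<std::size_t> uniform(0, row.size() - 1);
        return uniform(rng);
    }
    return sampleFromRow(row, rng);
}
```

Keeping exploration in a separate wrapper leaves the learned distribution untouched, so its probabilities still reflect only the PGA-APP updates.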

◆ setLearningRate()

void AIToolbox::MDP::PGAAPPPolicy::setLearningRate(double lRate)

This function sets the new learning rate.

Note: The learning rate must be >= 0.0.

- Parameters
  - lRate: The new learning rate.

◆ setPredictionLength()

void AIToolbox::MDP::PGAAPPPolicy::setPredictionLength(double pLength)

This function sets the new prediction length.

Note: The prediction length must be >= 0.0.

- Parameters
  - pLength: The new prediction length.
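A hypothetical parameter holder mirroring the constructor defaults and the two setter/getter pairs. The documentation only states that both values must be >= 0.0; throwing `std::invalid_argument` on a violation is an assumption made for this sketch, not documented behavior of PGAAPPPolicy.

```cpp
#include <cassert>
#include <stdexcept>

// Hypothetical holder for the two PGA-APP hyperparameters, using the same
// defaults as the PGAAPPPolicy constructor (lRate = 0.001, predictionLength = 3.0).
class PgaAppParams {
public:
    explicit PgaAppParams(double lRate = 0.001, double predictionLength = 3.0) {
        setLearningRate(lRate);
        setPredictionLength(predictionLength);
    }

    // Assumed behavior: reject negative values (and NaN, since !(NaN >= 0.0)).
    void setLearningRate(double lRate) {
        if (!(lRate >= 0.0)) throw std::invalid_argument("learning rate must be >= 0.0");
        lRate_ = lRate;
    }
    double getLearningRate() const { return lRate_; }

    void setPredictionLength(double pLength) {
        if (!(pLength >= 0.0)) throw std::invalid_argument("prediction length must be >= 0.0");
        pLength_ = pLength;
    }
    double getPredictionLength() const { return pLength_; }

private:
    double lRate_ = 0.0;
    double pLength_ = 0.0;
};
```

Validating before assigning means a rejected value leaves the previous setting in place.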

◆ stepUpdateP()

void AIToolbox::MDP::PGAAPPPolicy::stepUpdateP(size_t s)

This function updates the policy based on changes in the QFunction.

This function assumes that the QFunction values for the provided state have changed since the last update. It then uses these changes to update the policy for that state.

- Parameters
  - s: The state that needs to be updated.
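A self-contained sketch of a single per-state PGA-APP step, following the update rule from the original paper on a plain `std::vector` row. `pgaAppStep` and `projectToSimplex` are hypothetical names, and the Euclidean projection onto the probability simplex is one reasonable choice of projection that may not match what AIToolbox does internally. As with stepUpdateP, it expects the state's Q-values to have been updated beforehand.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <numeric>
#include <vector>

// Euclidean projection of v onto the probability simplex (sort-based method).
std::vector<double> projectToSimplex(const std::vector<double> &v) {
    std::vector<double> u(v);
    std::sort(u.begin(), u.end(), std::greater<double>());
    double cumsum = 0.0, theta = 0.0;
    for (std::size_t j = 0; j < u.size(); ++j) {
        cumsum += u[j];
        const double t = (cumsum - 1.0) / static_cast<double>(j + 1);
        if (u[j] - t > 0.0) theta = t;  // the largest such j determines theta
    }
    std::vector<double> out(v.size());
    for (std::size_t i = 0; i < v.size(); ++i) out[i] = std::max(v[i] - theta, 0.0);
    return out;
}

// One PGA-APP step for a single state.
// policyRow: pi(s, .), qRow: Q(s, .), eta: learning rate, gammaPred: prediction length.
void pgaAppStep(std::vector<double> &policyRow, const std::vector<double> &qRow,
                double eta, double gammaPred) {
    assert(policyRow.size() == qRow.size());
    // Value of the state under the current policy.
    const double value =
        std::inner_product(policyRow.begin(), policyRow.end(), qRow.begin(), 0.0);
    std::vector<double> updated(policyRow.size());
    for (std::size_t a = 0; a < policyRow.size(); ++a) {
        const double p = policyRow[a];
        // Estimated gradient of the state value with respect to pi(s, a).
        const double deltaHat = (p >= 1.0) ? qRow[a] - value
                                           : (qRow[a] - value) / (1.0 - p);
        // Subtract the approximate policy-prediction term gamma * |deltaHat| * pi(s, a).
        const double delta = deltaHat - gammaPred * std::abs(deltaHat) * p;
        updated[a] = p + eta * delta;
    }
    // Map the unconstrained step back to a valid distribution.
    policyRow = projectToSimplex(updated);
}
```

For example, with `pi = {0.5, 0.5}`, `q = {1.0, 0.0}`, `eta = 0.1` and a prediction length of 0, the step moves the policy to about `{0.6, 0.4}`, shifting probability toward the better action.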

The documentation for this class was generated from the following file:

- include/AIToolbox/MDP/Policies/PGAAPPPolicy.hpp