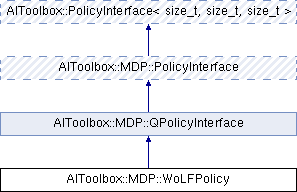

This class implements the WoLF learning algorithm.

#include <AIToolbox/MDP/Policies/WoLFPolicy.hpp>

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | WoLFPolicy (const QFunction &q, double deltaw=0.0125, double deltal=0.05, double scaling=5000.0) | Basic constructor. |
| void | stepUpdateP (size_t s) | This function updates the WoLF policy based on changes in the QFunction. |
| virtual size_t | sampleAction (const size_t &s) const override | This function chooses an action for state s, following the policy distribution. |
| virtual double | getActionProbability (const size_t &s, const size_t &a) const override | This function returns the probability of taking the specified action in the specified state. |
| virtual Matrix2D | getPolicy () const override | This function returns a matrix containing all probabilities of the policy. |
| void | setDeltaW (double deltaW) | This function sets the new learning rate if winning. |
| double | getDeltaW () const | This function returns the current learning rate during winning. |
| void | setDeltaL (double deltaL) | This function sets the new learning rate if losing. |
| double | getDeltaL () const | This function returns the current learning rate during loss. |
| void | setScaling (double scaling) | This function modifies the scaling parameter. |
| double | getScaling () const | This function returns the current scaling parameter. |

Public Member Functions inherited from AIToolbox::MDP::QPolicyInterface

| Return type | Member | Description |
|---|---|---|
| | QPolicyInterface (const QFunction &q) | Basic constructor. |
| const QFunction & | getQFunction () const | This function returns the underlying QFunction reference. |

Public Member Functions inherited from AIToolbox::PolicyInterface< size_t, size_t, size_t >

| Return type | Member | Description |
|---|---|---|
| | PolicyInterface (size_t s, size_t a) | Basic constructor. |
| virtual | ~PolicyInterface () | Basic virtual destructor. |
| const size_t & | getS () const | This function returns the number of states of the world. |
| const size_t & | getA () const | This function returns the number of available actions to the agent. |

Additional Inherited Members

Public Types inherited from AIToolbox::MDP::PolicyInterface

| using | Base = AIToolbox::PolicyInterface< size_t, size_t, size_t > |

Protected Attributes inherited from AIToolbox::MDP::QPolicyInterface

| const QFunction & | q_ |

Protected Attributes inherited from AIToolbox::PolicyInterface< size_t, size_t, size_t >

| size_t | S |

| size_t | A |

| RandomEngine | rand_ |

Detailed Description

This class implements the WoLF learning algorithm.

This algorithm progressively modifies the policy in response to changes in the underlying QFunction. In particular, it modifies the policy rapidly when the agent is "losing" (getting less reward than expected) and more slowly when it is "winning", since there is little reason to change behaviour when things go right.

An advantage of this algorithm is that it allows the policy to converge to non-deterministic solutions, for example when two players try to outmatch each other in rock-paper-scissors. At the same time, this particular version of the algorithm can take quite some time to converge to a good solution.
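The win/lose rule described above can be sketched in standalone C++. This is a minimal illustration of a WoLF-PHC style update for a single state, not AIToolbox's actual implementation. The `WoLFState` struct, the `expectedValue` and `wolfStep` functions, and the use of a running average policy as the baseline for deciding whether the agent is "winning" are all assumptions drawn from the general WoLF policy hill-climbing scheme.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical per-state data for illustration; not AIToolbox's internal layout.
struct WoLFState {
    std::vector<double> policy;     // current action probabilities, sums to 1
    std::vector<double> avgPolicy;  // running average of past policies
    unsigned visits = 0;            // number of updates applied to this state
};

// Expected Q-value of following distribution p given action values q.
inline double expectedValue(const std::vector<double> & p, const std::vector<double> & q) {
    double v = 0.0;
    for (std::size_t a = 0; a < p.size(); ++a) v += p[a] * q[a];
    return v;
}

// One WoLF-PHC style update. Returns true if the state was judged "winning".
inline bool wolfStep(WoLFState & st, const std::vector<double> & q, double deltaW, double deltaL) {
    const std::size_t A = q.size();
    assert(A > 1 && st.policy.size() == A && st.avgPolicy.size() == A);

    // Move the average policy towards the current one.
    ++st.visits;
    for (std::size_t a = 0; a < A; ++a)
        st.avgPolicy[a] += (st.policy[a] - st.avgPolicy[a]) / st.visits;

    // Winning: the current policy is worth strictly more than the average one.
    const bool winning = expectedValue(st.policy, q) > expectedValue(st.avgPolicy, q);
    const double delta = winning ? deltaW : deltaL;

    // Shift probability mass from the non-greedy actions to the greedy one.
    const auto best = static_cast<std::size_t>(std::max_element(q.begin(), q.end()) - q.begin());
    double moved = 0.0;
    for (std::size_t a = 0; a < A; ++a) {
        if (a == best) continue;
        const double take = std::min(st.policy[a], delta / (A - 1));
        st.policy[a] -= take;
        moved += take;
    }
    st.policy[best] += moved;
    return winning;
}
```

In this sketch, starting from a uniform two-action policy with Q-values {1.0, 0.0} and the default rates, the first call is judged losing and moves 0.05 of probability to action 0, while the second is judged winning and moves only 0.0125.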

Constructor & Destructor Documentation

◆ WoLFPolicy()

| AIToolbox::MDP::WoLFPolicy::WoLFPolicy | ( | const QFunction & | q, |
| | | double | deltaw = 0.0125, |
| | | double | deltal = 0.05, |
| | | double | scaling = 5000.0 |
| | ) | | |

Basic constructor.

See the setter functions for details on what the parameters do.

- Parameters

- q: The QFunction from which to extract policy updates.
- deltaw: The learning rate if this policy is currently winning.
- deltal: The learning rate if this policy is currently losing.
- scaling: The initial scaling rate to progressively reduce the learning rates.

Member Function Documentation

◆ getActionProbability()

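| virtual double AIToolbox::MDP::WoLFPolicy::getActionProbability | ( | const size_t & | s, |
| | | const size_t & | a |
| | ) | const override | |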

This function returns the probability of taking the specified action in the specified state.

- Parameters

- s: The selected state.
- a: The selected action.

- Returns

- The probability of taking the selected action in the specified state.

Implements AIToolbox::PolicyInterface< size_t, size_t, size_t >.

◆ getDeltaL()

| double AIToolbox::MDP::WoLFPolicy::getDeltaL | ( | ) | const |

This function returns the current learning rate during loss.

- Returns

- The learning rate during loss.

◆ getDeltaW()

| double AIToolbox::MDP::WoLFPolicy::getDeltaW | ( | ) | const |

This function returns the current learning rate during winning.

- Returns

- The learning rate during winning.

◆ getPolicy()

| virtual Matrix2D AIToolbox::MDP::WoLFPolicy::getPolicy | ( | ) | const override |

This function returns a matrix containing all probabilities of the policy.

Ideally, this function should be called only when there is a repeated need to access the same policy values efficiently.

Implements AIToolbox::MDP::PolicyInterface.

◆ getScaling()

| double AIToolbox::MDP::WoLFPolicy::getScaling | ( | ) | const |

This function returns the current scaling parameter.

- Returns

- The current scaling parameter.

◆ sampleAction()

| virtual size_t AIToolbox::MDP::WoLFPolicy::sampleAction | ( | const size_t & | s | ) | const override |

This function chooses an action for state s, following the policy distribution.

To improve learning, it may be useful to wrap this policy in an EpsilonPolicy to provide some exploration.

- Parameters

- s: The sampled state of the policy.

- Returns

- The chosen action.

Implements AIToolbox::PolicyInterface< size_t, size_t, size_t >.
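Sampling from a policy distribution, with optional exploration, can be sketched as follows. This is a standalone illustration and does not use AIToolbox. The function names `sampleFromRow` and `sampleWithExploration` are hypothetical, and `epsilon` is assumed here to mean the probability of picking a uniformly random action, which may not match the convention used by EpsilonPolicy.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Draw an action index according to the probabilities of a single state.
inline std::size_t sampleFromRow(const std::vector<double> & probs, std::mt19937 & rng) {
    assert(!probs.empty());
    std::discrete_distribution<std::size_t> dist(probs.begin(), probs.end());
    return dist(rng);
}

// Assumed exploration rule: with probability epsilon pick a uniformly random
// action, otherwise follow the policy distribution.
inline std::size_t sampleWithExploration(const std::vector<double> & probs, double epsilon, std::mt19937 & rng) {
    assert(!probs.empty() && epsilon >= 0.0 && epsilon <= 1.0);
    if (std::bernoulli_distribution(epsilon)(rng)) {
        std::uniform_int_distribution<std::size_t> uniform(0, probs.size() - 1);
        return uniform(rng);
    }
    return sampleFromRow(probs, rng);
}
```

Keeping exploration in a separate wrapper leaves the learned distribution untouched, so the probabilities reported for the policy itself still reflect only what was learned.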

◆ setDeltaL()

| void AIToolbox::MDP::WoLFPolicy::setDeltaL | ( | double | deltaL | ) |

This function sets the new learning rate if losing.

This is the amount by which the policy is modified when stepUpdateP() is called and WoLFPolicy determines, based on the current QFunction, that it is currently losing.

- Parameters

- deltaL: The new learning rate during loss.

◆ setDeltaW()

| void AIToolbox::MDP::WoLFPolicy::setDeltaW | ( | double | deltaW | ) |

This function sets the new learning rate if winning.

This is the amount by which the policy is modified when stepUpdateP() is called and WoLFPolicy determines, based on the current QFunction, that it is currently winning.

- Parameters

- deltaW: The new learning rate during wins.

◆ setScaling()

| void AIToolbox::MDP::WoLFPolicy::setScaling | ( | double | scaling | ) |

This function modifies the scaling parameter.

To converge, WoLFPolicy needs to progressively reduce its learning rates over time. It does so automatically, which avoids having to call both learning rate setters constantly. In addition, in theory the learning rate should change per state, which would be even harder to manage from outside the class.

Once the policy has determined whether it is winning or losing, the selected learning rate is scaled with the following formula:

newLearningRate = originalLearningRate / ( c_[s] / scaling + 1 );

- Parameters

- scaling: The new scaling factor.
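The scaling formula above can be tried out in isolation. The helper below is a hypothetical standalone function, not part of AIToolbox. Its parameter `c` stands in for the per-state counter `c_[s]`, whose exact meaning is not described on this page, so it is treated here simply as a non-negative number.

```cpp
#include <cassert>

// Applies newLearningRate = originalLearningRate / ( c / scaling + 1 ).
// c is an assumed stand-in for the per-state counter c_[s].
inline double scaledLearningRate(double originalLearningRate, double c, double scaling) {
    assert(scaling > 0.0 && c >= 0.0);
    return originalLearningRate / (c / scaling + 1.0);
}
```

With the default scaling of 5000, a losing rate of 0.05 is unchanged at a counter of 0, halves to 0.025 when the counter reaches 5000, and drops to 0.0125 at 15000. A larger scaling value therefore slows the decay of both learning rates.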

◆ stepUpdateP()

| void AIToolbox::MDP::WoLFPolicy::stepUpdateP | ( | size_t | s | ) |

This function updates the WoLF policy based on changes in the QFunction.

This function should be called between the agent's actions, using the agent's current state.

- Parameters

- s: The state that needs to be updated.

The documentation for this class was generated from the following file:

- include/AIToolbox/MDP/Policies/WoLFPolicy.hpp