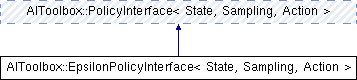

This class is a policy wrapper for epsilon action choice.

#include <AIToolbox/EpsilonPolicyInterface.hpp>

Public Types | |

| using | Base = PolicyInterface< State, Sampling, Action > |

Public Member Functions | |

| EpsilonPolicyInterface (const Base &p, double epsilon=0.1) | |

| Basic constructor. | |

| virtual Action | sampleAction (const Sampling &s) const override |

| This function chooses an action for state s, following the policy distribution and epsilon. | |

| virtual double | getActionProbability (const Sampling &s, const Action &a) const override |

| This function returns the probability of taking the specified action in the specified state. | |

| void | setEpsilon (double e) |

| This function sets the epsilon parameter. | |

| double | getEpsilon () const |

| This function will return the currently set epsilon parameter. | |

Public Member Functions inherited from AIToolbox::PolicyInterface< State, Sampling, Action > | |

| PolicyInterface (State s, Action a) | |

| Basic constructor. | |

| virtual | ~PolicyInterface () |

| Basic virtual destructor. | |

| const State & | getS () const |

| This function returns the number of states of the world. | |

| const Action & | getA () const |

| This function returns the number of available actions to the agent. | |

Protected Member Functions | |

| virtual Action | sampleRandomAction () const =0 |

| This function returns a random action in the Action space. | |

| virtual double | getRandomActionProbability () const =0 |

| This function returns the probability of picking a random action. | |

Protected Attributes | |

| const Base & | policy_ |

| double | epsilon_ |

Protected Attributes inherited from AIToolbox::PolicyInterface< State, Sampling, Action > | |

| State | S |

| Action | A |

| RandomEngine | rand_ |

Detailed Description

template<typename State, typename Sampling, typename Action>

class AIToolbox::EpsilonPolicyInterface< State, Sampling, Action >

This class is a policy wrapper for epsilon action choice.

This class is used to wrap already existing policies to implement automatic exploratory behaviour (e.g. epsilon-greedy policies).

An epsilon-greedy policy is a policy that takes the greedy action with probability 1-epsilon, and otherwise takes a random action. Such policies are useful to force the agent to explore an unknown model, in order to gain new information that can be used to refine it and thus obtain more reward.

Please note that to obtain an epsilon-greedy policy the wrapped policy needs to already be greedy with respect to the model.
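To make the wrapper pattern concrete, here is a minimal, self-contained sketch assuming discrete states and actions represented as plain `std::size_t` indices. `SimplePolicy`, `GreedyTable`, `EpsilonWrapper` and `DiscreteEpsilonWrapper` are hypothetical names, not AIToolbox classes; the sketch only mirrors the members listed on this page (a stored reference to the wrapped policy, an epsilon value, and the two pure virtual random-action hooks) and should not be read as the library's actual implementation.

```cpp
#include <cstddef>
#include <random>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical minimal base with discrete states/actions as plain indices.
// It only mirrors the shape of PolicyInterface; it is not the AIToolbox class.
class SimplePolicy {
  public:
    SimplePolicy(std::size_t s, std::size_t a) : S(s), A(a), rand_(42) {}
    virtual ~SimplePolicy() = default;
    virtual std::size_t sampleAction(const std::size_t & s) const = 0;
    virtual double getActionProbability(const std::size_t & s, const std::size_t & a) const = 0;
    std::size_t getS() const { return S; }
    std::size_t getA() const { return A; }

  protected:
    std::size_t S, A;
    mutable std::mt19937 rand_;  // mutable so that const sampling can advance it
};

// Hypothetical greedy policy: a fixed best action for each state.
class GreedyTable : public SimplePolicy {
  public:
    GreedyTable(std::vector<std::size_t> best, std::size_t a)
        : SimplePolicy(best.size(), a), best_(std::move(best)) {}
    std::size_t sampleAction(const std::size_t & s) const override { return best_[s]; }
    double getActionProbability(const std::size_t & s, const std::size_t & a) const override {
        return best_[s] == a ? 1.0 : 0.0;
    }

  private:
    std::vector<std::size_t> best_;
};

// Sketch of the epsilon wrapper. The random-action hooks are pure virtual,
// so subclasses decide what a "random action" means for their action space.
class EpsilonWrapper : public SimplePolicy {
  public:
    EpsilonWrapper(const SimplePolicy & p, double epsilon = 0.1)
        : SimplePolicy(p.getS(), p.getA()), policy_(p), epsilon_(0.0) { setEpsilon(epsilon); }

    // With probability epsilon pick a random action, else defer to the policy.
    std::size_t sampleAction(const std::size_t & s) const override {
        std::bernoulli_distribution explore(epsilon_);
        if (explore(rand_)) return sampleRandomAction();
        return policy_.sampleAction(s);
    }

    // Mixture of the random-action probability and the wrapped policy.
    double getActionProbability(const std::size_t & s, const std::size_t & a) const override {
        return epsilon_ * getRandomActionProbability()
             + (1.0 - epsilon_) * policy_.getActionProbability(s, a);
    }

    // Tests !(in range) rather than (out of range), so that NaN is rejected too.
    void setEpsilon(double e) {
        if (!(e >= 0.0 && e <= 1.0)) throw std::invalid_argument("epsilon must be in [0, 1]");
        epsilon_ = e;
    }
    double getEpsilon() const { return epsilon_; }

  protected:
    virtual std::size_t sampleRandomAction() const = 0;
    virtual double getRandomActionProbability() const = 0;

    const SimplePolicy & policy_;  // a reference: the wrapped policy must outlive this
    double epsilon_;
};

// Concrete discrete version: random actions are uniform over the A actions.
class DiscreteEpsilonWrapper : public EpsilonWrapper {
  public:
    DiscreteEpsilonWrapper(const SimplePolicy & p, double epsilon = 0.1) : EpsilonWrapper(p, epsilon) {}

  protected:
    std::size_t sampleRandomAction() const override {
        std::uniform_int_distribution<std::size_t> dist(0, A - 1);
        return dist(rand_);
    }
    double getRandomActionProbability() const override { return 1.0 / static_cast<double>(A); }
};
```

With epsilon = 0.3 and three actions, wrapping `GreedyTable` gives the greedy action a probability of 0.7 + 0.3/3 = 0.8 rather than 0.7, because a random draw can also land on the greedy action.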

- Template Parameters

- State: This defines the type that is used to store the state space.
- Sampling: This defines the type that is used to sample from the policy.
- Action: This defines the type that is used to handle actions.

Member Typedef Documentation

◆ Base

| using AIToolbox::EpsilonPolicyInterface< State, Sampling, Action >::Base = PolicyInterface<State, Sampling, Action> |

Constructor & Destructor Documentation

◆ EpsilonPolicyInterface()

| AIToolbox::EpsilonPolicyInterface< State, Sampling, Action >::EpsilonPolicyInterface | ( | const Base & | p, | double | epsilon = 0.1 | ) |

Basic constructor.

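This constructor saves the input policy and the epsilon parameter for later use. Only a reference to the input policy is stored (see policy_), so the wrapped policy must outlive this object.

The epsilon parameter must be >= 0.0 and <= 1.0; otherwise, the constructor throws std::invalid_argument.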

- Parameters

- p: The policy that is being extended.
- epsilon: The parameter that controls the amount of exploration.

Member Function Documentation

◆ getActionProbability()

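| virtual double AIToolbox::EpsilonPolicyInterface< State, Sampling, Action >::getActionProbability | ( | const Sampling & | s, | const Action & | a | ) const | override virtual |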

This function returns the probability of taking the specified action in the specified state.

This function takes the epsilon parameter into account while computing the final probability.
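Combining the sampling rule described for sampleAction() (random with probability epsilon, otherwise the wrapped policy) with getRandomActionProbability(), the returned value should be the mixture below. This is derived from the descriptions on this page rather than copied from the implementation; here $\pi$ denotes the wrapped policy, $p_{\text{rand}}$ the value of getRandomActionProbability(), and $|A|$ the number of actions in the discrete case.

```latex
P_\epsilon(a \mid s) = (1 - \epsilon)\,\pi(a \mid s) + \epsilon\, p_{\text{rand}},
\qquad p_{\text{rand}} = \frac{1}{|A|} \quad \text{(discrete action space)}

\sum_{a \in A} P_\epsilon(a \mid s)
  = (1 - \epsilon) \sum_{a \in A} \pi(a \mid s) + \epsilon \sum_{a \in A} \frac{1}{|A|}
  = (1 - \epsilon) + \epsilon = 1
```

In particular, if $\pi$ is greedy the greedy action receives $1 - \epsilon + \epsilon / |A|$, not just $1 - \epsilon$.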

- Parameters

- s: The selected state.
- a: The selected action.

- Returns

- The probability of taking the selected action in the specified state.

Implements AIToolbox::PolicyInterface< State, Sampling, Action >.

◆ getEpsilon()

| double AIToolbox::EpsilonPolicyInterface< State, Sampling, Action >::getEpsilon | ( | ) const |

This function will return the currently set epsilon parameter.

- Returns

- The currently set epsilon parameter.

◆ getRandomActionProbability()

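| virtual double AIToolbox::EpsilonPolicyInterface< State, Sampling, Action >::getRandomActionProbability | ( | ) const | protected pure virtual |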

This function returns the probability of picking a random action.

This is simply one over the size of the action space; however, since the action space may not be described by a single number, it is left to the implementation to decide how best to compute it.

- Returns

- The probability of picking an action at random.

◆ sampleAction()

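| virtual Action AIToolbox::EpsilonPolicyInterface< State, Sampling, Action >::sampleAction | ( | const Sampling & | s | ) const | override virtual |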

This function chooses an action for state s, following the policy distribution and epsilon.

With probability epsilon, this function selects a random action; otherwise, it selects an action according to the distribution specified by the wrapped policy.
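One way to check the sampling rule above is to simulate it. The hypothetical `greedyFrequency` function below assumes a discrete action space with uniform random actions and a wrapped policy that always returns one fixed greedy action; it reports how often that greedy action comes out, which should approach 1 - epsilon + epsilon / A because a random draw can also pick the greedy action.

```cpp
#include <cstddef>
#include <random>

// Hypothetical simulation of the sampling rule for a discrete space: with
// probability epsilon pick uniformly among A actions, otherwise return the
// wrapped policy's (here: fixed greedy) action. Returns the empirical
// frequency with which the greedy action is chosen.
inline double greedyFrequency(double epsilon, std::size_t A, std::size_t greedy,
                              std::size_t trials, unsigned seed) {
    std::mt19937 rng(seed);
    std::bernoulli_distribution explore(epsilon);
    std::uniform_int_distribution<std::size_t> randomAction(0, A - 1);
    std::size_t hits = 0;
    for (std::size_t i = 0; i < trials; ++i) {
        std::size_t a = explore(rng) ? randomAction(rng) : greedy;
        if (a == greedy) ++hits;
    }
    return static_cast<double>(hits) / static_cast<double>(trials);
}
```

With epsilon = 0.2 and four actions, the expected frequency is 0.8 + 0.2/4 = 0.85; with epsilon = 0 the greedy action is always returned.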

- Parameters

- s: The sampled state of the policy.

- Returns

- The chosen action.

Implements AIToolbox::PolicyInterface< State, Sampling, Action >.

◆ sampleRandomAction()

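| virtual Action AIToolbox::EpsilonPolicyInterface< State, Sampling, Action >::sampleRandomAction | ( | ) const | protected pure virtual |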

This function returns a random action in the Action space.

- Returns

- A valid random action.
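Since sampleRandomAction() takes no state argument, the random choice cannot depend on the current state. The sketch below shows one possible implementation for a hypothetical factored action space (factor i takes values in [0, sizes[i])); `sampleFactoredAction` is an illustrative name, not part of the library. Drawing each factor independently and uniformly gives every joint action the same probability, one over the product of the factor sizes.

```cpp
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical factored action space: factor i takes values in [0, sizes[i]).
// Precondition: every sizes[i] > 0. Each factor is drawn independently and
// uniformly, which makes the resulting joint action uniformly random.
inline std::vector<std::size_t> sampleFactoredAction(const std::vector<std::size_t> & sizes,
                                                     std::mt19937 & rng) {
    std::vector<std::size_t> action(sizes.size());
    for (std::size_t i = 0; i < sizes.size(); ++i) {
        std::uniform_int_distribution<std::size_t> dist(0, sizes[i] - 1);
        action[i] = dist(rng);
    }
    return action;
}
```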

◆ setEpsilon()

| void AIToolbox::EpsilonPolicyInterface< State, Sampling, Action >::setEpsilon | ( | double | e | ) |

This function sets the epsilon parameter.

The epsilon parameter determines the amount of exploration this policy will enforce when selecting actions. In particular, actions are selected randomly with probability epsilon, and are selected following the underlying policy with probability 1-epsilon.

The epsilon parameter must be >= 0.0 and <= 1.0; otherwise, the function throws std::invalid_argument.
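Because setEpsilon() lets the amount of exploration change after construction, a caller could, for instance, lower epsilon over time. The hypothetical `linearEpsilon` schedule below is one such assumption, not something this class does on its own: it moves linearly from `start` to `end` over `steps` calls and clamps the result so it always stays within the range setEpsilon() accepts.

```cpp
#include <algorithm>
#include <cstddef>

// Hypothetical linear schedule: goes from `start` to `end` over `steps`
// calls, then stays at `end`. The result is clamped to [0, 1] so it is
// always a valid argument for setEpsilon().
inline double linearEpsilon(double start, double end, std::size_t step, std::size_t steps) {
    double t = steps == 0 ? 1.0
                          : std::min(1.0, static_cast<double>(step) / static_cast<double>(steps));
    double e = start + (end - start) * t;
    return std::max(0.0, std::min(1.0, e));
}
```

A training loop might then call something like `policy.setEpsilon(linearEpsilon(1.0, 0.05, episode, 10000))` once per episode, where `policy` is an instance of a concrete subclass and `episode` a counter; both names are illustrative.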

- Parameters

- e: The new epsilon parameter.

Member Data Documentation

◆ epsilon_

| double AIToolbox::EpsilonPolicyInterface< State, Sampling, Action >::epsilon_ | protected |

◆ policy_

| const Base & AIToolbox::EpsilonPolicyInterface< State, Sampling, Action >::policy_ | protected |

The documentation for this class was generated from the following file:

- include/AIToolbox/EpsilonPolicyInterface.hpp