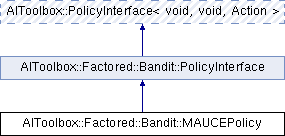

This class represents the Multi-Agent Upper Confidence Exploration algorithm.

#include <AIToolbox/Factored/Bandit/Policies/MAUCEPolicy.hpp>

Public Member Functions | |

| MAUCEPolicy (const Experience &exp, std::vector< double > ranges) | |

| Basic constructor. | |

| virtual Action | sampleAction () const override |

| This function selects an action using MAUCE. | |

| virtual double | getActionProbability (const Action &a) const override |

| This function returns the probability of taking the specified action. | |

| const Experience & | getExperience () const |

| This function returns the Experience we learn from. | |

Public Member Functions inherited from AIToolbox::PolicyInterface< void, void, Action > | |

| PolicyInterface (Action a) | |

| Basic constructor. | |

| virtual | ~PolicyInterface () |

| Basic virtual destructor. | |

| virtual double | getActionProbability (const Action &a) const =0 |

| This function returns the probability of taking the specified action. | |

| const Action & | getA () const |

| This function returns the number of actions available to the agent. | |

Additional Inherited Members | |

Public Types inherited from AIToolbox::Factored::Bandit::PolicyInterface | |

| using | Base = AIToolbox::PolicyInterface< void, void, Action > |

Protected Attributes inherited from AIToolbox::PolicyInterface< void, void, Action > | |

| Action | A |

| RandomEngine | rand_ |

Detailed Description

This class represents the Multi-Agent Upper Confidence Exploration algorithm.

This algorithm is similar in spirit to LLR, but it performs a much more sophisticated variable elimination step that includes branch-and-bound.

It does this by knowing, via its parameters, the maximum reward range for each group of interdependent agents (maximum possible reward minus minimum possible reward). This allows it to estimate the uncertainty around any given joint action by keeping track, for each PartialAction, of its upper and lower bounds.
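The idea of turning per-group reward ranges into an upper bound for a joint action can be sketched roughly as follows. This is a minimal, self-contained illustration and not the library's code: `LocalStat` and `upperBound` are hypothetical names, the Hoeffding-style shape of the bonus and its constant are assumptions, and `logTerm` stands in for whatever time-dependent logarithmic factor the algorithm actually uses.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical statistics for the local action one group sees in a
// given joint action. Not an AIToolbox type.
struct LocalStat {
    double mean;   // average reward observed for this local action
    double count;  // how many times this local action was tried (> 0)
};

// Upper bound of a joint action: the sum of the groups' average rewards
// plus a single exploration bonus that pools every group's uncertainty.
// ASSUMPTION: the bonus has a Hoeffding-like form; constants may differ
// from the ones used by MAUCEPolicy.
double upperBound(const std::vector<LocalStat> & picked,
                  const std::vector<double> & ranges,
                  double logTerm) {
    double meanSum = 0.0;
    double uncertainty = 0.0;
    for (std::size_t g = 0; g < picked.size(); ++g) {
        meanSum += picked[g].mean;
        // Wide reward ranges and rarely tried local actions both
        // increase the uncertainty of the joint action.
        uncertainty += ranges[g] * ranges[g] / picked[g].count;
    }
    return meanSum + std::sqrt(0.5 * uncertainty * logTerm);
}
```

In this sketch the bonus shrinks as the counts grow, so a joint action made only of well-explored local actions is valued close to its average reward.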

During the VariableElimination step (done with UCVE), the uncertainties are tracked during the cross-sums, which allows pruning actions that are known to be suboptimal.
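The pruning idea can be illustrated with a generic interval-dominance check. This is only a sketch under stated assumptions: `Bounded` and `pruneDominated` are hypothetical names, and the actual criteria applied by UCVE during the cross-sums may differ in detail.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical candidate: a partial action summarized by the bounds on
// the value it can still achieve. Not an AIToolbox type.
struct Bounded {
    double lower;
    double upper;
};

// Keeps only the candidates that could still turn out to be the best.
// A candidate is discarded when its upper bound is below the highest
// lower bound, since some other candidate is then certainly better.
std::vector<Bounded> pruneDominated(const std::vector<Bounded> & candidates) {
    if (candidates.empty()) return {};

    double bestLower = candidates.front().lower;
    for (const auto & c : candidates)
        bestLower = std::max(bestLower, c.lower);

    std::vector<Bounded> kept;
    for (const auto & c : candidates)
        if (c.upper >= bestLower)
            kept.push_back(c);
    return kept;
}
```

Every candidate removed at this stage is one fewer entry to combine in later cross-sums, which is where the savings of a branch-and-bound approach come from.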

Constructor & Destructor Documentation

◆ MAUCEPolicy()

| AIToolbox::Factored::Bandit::MAUCEPolicy::MAUCEPolicy | ( | const Experience & | exp, | std::vector< double > | ranges | ) |

Basic constructor.

This constructor needs to know in advance the groups of agents that must cooperate in order to reach their goal. This is converted into a simple Q-Function containing the learned averages for those groups.

Note: there can be multiple groups with the same keys (to exploit structure of multiple reward functions between the same agents), but each PartialKeys must be sorted!

- Parameters

- exp: The Experience we learn from.
- ranges: The ranges for each local group.
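The requirements stated above (sorted keys, one range per local group) can be checked before building the policy. The following is a hedged, self-contained sketch: `groupsAreValid` is a hypothetical helper that is not part of AIToolbox, and the `PartialKeys` alias below is an assumption made so the example compiles on its own.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// ASSUMPTION: a group is described by the ids of the agents in it.
using PartialKeys = std::vector<std::size_t>;

// Returns true when there is exactly one non-negative range per group
// and the agent ids of every group are sorted. Duplicate groups are
// allowed, matching the note above.
bool groupsAreValid(const std::vector<PartialKeys> & groups,
                    const std::vector<double> & ranges) {
    if (groups.size() != ranges.size()) return false;
    for (std::size_t g = 0; g < groups.size(); ++g) {
        if (!std::is_sorted(groups[g].begin(), groups[g].end())) return false;
        // A range is "max reward minus min reward", so it cannot be negative.
        if (ranges[g] < 0.0) return false;
    }
    return true;
}
```

For example, the groups `{0, 1}`, `{1, 2}`, `{0, 1}` with ranges `1.0`, `2.0`, `1.0` pass this check, while a group written as `{1, 0}` does not.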

Member Function Documentation

◆ getActionProbability()

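| virtual double AIToolbox::Factored::Bandit::MAUCEPolicy::getActionProbability | ( | const Action & | a | ) | const | override virtual |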

This function returns the probability of taking the specified action.

As sampleAction() is deterministic, we simply run it to check that the Action it returns is equal to the one passed as input.

- Parameters

- a: The selected action.

- Returns

- This function returns an approximation of the probability of choosing the input action.
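The check described above, running a deterministic sampler and comparing its result with the input, might look like the sketch below. It is an illustration only: `deterministicActionProbability` is a hypothetical free function, the `Action` alias is an assumption, and returning exactly 1.0 or 0.0 is also an assumption about how the comparison is turned into a probability.

```cpp
#include <cstddef>
#include <vector>

// ASSUMPTION: a joint action holds one choice per agent.
using Action = std::vector<std::size_t>;

// Probability of `a` under a policy whose sampler always returns the
// same action for the current statistics: all the mass sits on that
// single action.
template <typename Sampler>
double deterministicActionProbability(const Sampler & sample, const Action & a) {
    return sample() == a ? 1.0 : 0.0;
}
```

Note that each query costs one full action selection, so calling it in a tight loop is as expensive as sampling repeatedly.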

◆ getExperience()

| const Experience& AIToolbox::Factored::Bandit::MAUCEPolicy::getExperience | ( | ) | const |

This function returns the Experience we learn from.

The returned statistics do not include the exploration part, which allows the creation of a policy using the learned QFunction (since otherwise this algorithm would explore forever).

- Returns

- The Experience containing all statistics from the input data.

◆ sampleAction()

| virtual Action AIToolbox::Factored::Bandit::MAUCEPolicy::sampleAction | ( | ) | const | override virtual |

This function selects an action using MAUCE.

We construct a UCVE process, which is able to compute the Action that maximizes the correct overall UCB exploration bonus.

However, UCVE is a somewhat complex and slow algorithm; for a faster alternative, see ThompsonSamplingPolicy.

- See also

- ThompsonSamplingPolicy

- Returns

- The new optimal action to be taken at the next timestep.

Implements AIToolbox::PolicyInterface< void, void, Action >.

The documentation for this class was generated from the following file:

- include/AIToolbox/Factored/Bandit/Policies/MAUCEPolicy.hpp