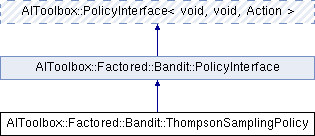

This class implements a Thompson sampling policy.

#include <AIToolbox/Factored/Bandit/Policies/ThompsonSamplingPolicy.hpp>

Public Member Functions | |

| ThompsonSamplingPolicy (const Experience &exp) | |

| Basic constructor. | |

| virtual Action | sampleAction () const override |

| This function chooses an action using Thompson sampling. | |

| virtual double | getActionProbability (const Action &a) const override |

| This function returns the probability of taking the specified action. | |

| const Experience & | getExperience () const |

| This function returns a reference to the underlying Experience we use. | |

Public Member Functions inherited from AIToolbox::PolicyInterface< void, void, Action > | |

| PolicyInterface (Action a) | |

| Basic constructor. | |

| virtual | ~PolicyInterface () |

| Basic virtual destructor. | |

| virtual double | getActionProbability (const Action &a) const =0 |

| This function returns the probability of taking the specified action. | |

| const Action & | getA () const |

| This function returns the number of available actions to the agent. | |

Static Public Member Functions | |

| static void | setupGraph (const Experience &exp, VariableElimination::GVE::Graph &graph, RandomEngine &rnd) |

| This function constructs a graph by sampling the provided experience. | |

Additional Inherited Members | |

Public Types inherited from AIToolbox::Factored::Bandit::PolicyInterface | |

| using | Base = AIToolbox::PolicyInterface< void, void, Action > |

Protected Attributes inherited from AIToolbox::PolicyInterface< void, void, Action > | |

| Action | A |

| RandomEngine | rand_ |

Detailed Description

This class implements a Thompson sampling policy.

This class uses Normal distributions to model its uncertainty about each arm's average reward. Each arm is represented by a Normal distribution centered on the arm's observed average, with a variance that decreases as more experience is gathered.

Note that this class assumes that rewards are normalized into the [0,1] range; it does not check this.

The Normal distribution is best suited to Normally distributed rewards. Another implementation (not provided here) uses Beta distributions to handle Bernoulli-distributed rewards.

Constructor & Destructor Documentation

◆ ThompsonSamplingPolicy()

| AIToolbox::Factored::Bandit::ThompsonSamplingPolicy::ThompsonSamplingPolicy | ( | const Experience & | exp | ) |

Basic constructor.

- Parameters

- exp: The Experience we learn from.

Member Function Documentation

◆ getActionProbability()

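| virtual double AIToolbox::Factored::Bandit::ThompsonSamplingPolicy::getActionProbability | ( | const Action & | a | ) | const | override, virtual |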

This function returns the probability of taking the specified action.

WARNING: In this class, the true probability of selecting the input action can only be computed via numerical integration, since we are dealing with |A| Normal random variables. To avoid this, we sample many times and return the fraction of samples in which the input action was selected. This makes the function very slow, so call it sparingly.

To keep things short, we call "sampleAction" 1000 times and count how many times the provided input was sampled. This requires performing 1000 VariableElimination runs.

- Parameters

- a: The selected action.

- Returns

- This function returns an approximation of the probability of choosing the input action.
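The sampling-based estimate described above can be illustrated with a minimal sketch. This is not the library's implementation: `estimateActionProbability` and its template parameters are hypothetical names chosen for illustration, and the sampler is any callable standing in for `sampleAction`. Only the default of 1000 samples is taken from the description.

```cpp
#include <cassert>

// Hypothetical helper, not part of AIToolbox: estimates the probability
// that `sampleAction()` returns `target` by drawing `samples` actions and
// returning the fraction that match.
template <typename Action, typename Sampler>
double estimateActionProbability(Sampler && sampleAction,
                                 const Action & target,
                                 unsigned samples = 1000) {
    assert(samples > 0);
    unsigned hits = 0;
    for (unsigned i = 0; i < samples; ++i) {
        // Each call stands in for one full Thompson sampling draw, which
        // in the factored policy means one VariableElimination run.
        if (sampleAction() == target) ++hits;
    }
    return static_cast<double>(hits) / samples;
}
```

Because every sample is a full draw, the cost grows linearly with the sample count, which is why the result is better cached than recomputed.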

◆ getExperience()

| const Experience& AIToolbox::Factored::Bandit::ThompsonSamplingPolicy::getExperience | ( | ) | const |

This function returns a reference to the underlying Experience we use.

- Returns

- The internal Experience reference.

◆ sampleAction()

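| virtual Action AIToolbox::Factored::Bandit::ThompsonSamplingPolicy::sampleAction | ( | ) | const | override, virtual |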

This function chooses an action using Thompson sampling.

For each possible local joint action, we sample its possible value from a normal distribution with mean equal to its reported Q-value and standard deviation equal to 1.0/(counts+1).

We then perform VariableElimination on the produced rules to select the optimal action to take.

- Returns

- The chosen action.

Implements AIToolbox::PolicyInterface< void, void, Action >.
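As a rough illustration of the sampling step described for sampleAction(), the sketch below applies the same idea to a flat (non-factored) bandit. It is an assumption-laden simplification, not the library's code: `ArmStats` and `thompsonSample` are hypothetical names, and a plain argmax stands in for the VariableElimination step that the factored policy uses to combine local joint actions.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical per-arm statistics; not an AIToolbox type.
struct ArmStats {
    double mean;      // average observed reward (the "Q-value")
    unsigned counts;  // number of times the arm was tried
};

// Draws one value per arm from Normal(mean, 1 / (counts + 1)), following
// the description above, and returns the index of the largest draw.
std::size_t thompsonSample(const std::vector<ArmStats> & arms,
                           std::mt19937 & rng) {
    assert(!arms.empty());
    std::size_t best = 0;
    double bestValue = 0.0;
    for (std::size_t i = 0; i < arms.size(); ++i) {
        std::normal_distribution<double> dist(
            arms[i].mean, 1.0 / (arms[i].counts + 1));
        const double value = dist(rng);
        if (i == 0 || value > bestValue) {
            best = i;
            bestValue = value;
        }
    }
    return best;
}
```

Arms with few observations have a wide distribution and are occasionally sampled above better-known arms, which is what drives exploration. As counts grow the draws concentrate around the mean and the choice becomes effectively greedy.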

◆ setupGraph()

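| static void AIToolbox::Factored::Bandit::ThompsonSamplingPolicy::setupGraph | ( | const Experience & exp, VariableElimination::GVE::Graph & graph, RandomEngine & rnd | ) | static |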

This function constructs a graph by sampling the provided experience.

This function is the core of ThompsonSamplingPolicy, and is provided so that other methods can leverage Thompson sampling in a simpler way.

Given a newly built, empty graph, we sample the experience using a Student t-distribution, so that the value sampled for each local joint action has the correct likelihood of being the true one under the Bayesian posterior.

- Parameters

- exp: The experience data we need to use.

- graph: The output, constructed graph.

- rnd: The random engine needed to sample.
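To make the Student t sampling concrete, here is a minimal sketch of a single posterior draw for one local joint action. It assumes the standard result for a Normal model with unknown mean and variance under a non-informative prior: the posterior of the mean is a Student t-distribution with n - 1 degrees of freedom, centered on the sample mean and scaled by sqrt(M2 / (n (n - 1))). Whether setupGraph uses exactly this formula is not stated here. `RewardSummary` and `samplePosteriorMean` are hypothetical names, not AIToolbox API.

```cpp
#include <cassert>
#include <cmath>
#include <random>

// Hypothetical summary of the rewards seen for one local joint action;
// not an AIToolbox type.
struct RewardSummary {
    double mean;     // sample mean of the observed rewards
    double m2;       // sum of squared deviations from the mean
    unsigned count;  // number of observations (at least 2 for this sketch)
};

// Draws one plausible value for the true mean reward from a Student t
// posterior with (count - 1) degrees of freedom.
double samplePosteriorMean(const RewardSummary & s, std::mt19937 & rng) {
    assert(s.count >= 2);
    std::student_t_distribution<double> t(s.count - 1);
    const double n = static_cast<double>(s.count);
    const double scale = std::sqrt(s.m2 / (n * (n - 1.0)));
    return s.mean + t(rng) * scale;
}
```

A graph-building routine would presumably perform one such draw per local joint action and store the result as that action's value before maximization. Cases with fewer than two observations need separate handling, which this sketch leaves out.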

The documentation for this class was generated from the following file:

- include/AIToolbox/Factored/Bandit/Policies/ThompsonSamplingPolicy.hpp