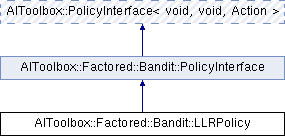

This class represents the Learning with Linear Rewards algorithm.

#include <AIToolbox/Factored/Bandit/Policies/LLRPolicy.hpp>

Public Member Functions

| Type | Member | Description |
| --- | --- | --- |
| | LLRPolicy (const Experience &exp) | Basic constructor. |
| virtual Action | sampleAction () const override | This function selects an action using LLR. |
| virtual double | getActionProbability (const Action &a) const override | This function returns the probability of taking the specified action. |
| const Experience & | getExperience () const | This function returns the Experience we use to learn. |

Public Member Functions inherited from AIToolbox::PolicyInterface< void, void, Action >

| Type | Member | Description |
| --- | --- | --- |
| | PolicyInterface (Action a) | Basic constructor. |
| virtual | ~PolicyInterface () | Basic virtual destructor. |
| virtual double | getActionProbability (const Action &a) const =0 | This function returns the probability of taking the specified action. |
| const Action & | getA () const | This function returns the number of available actions to the agent. |

Additional Inherited Members

Public Types inherited from AIToolbox::Factored::Bandit::PolicyInterface

| Type | Member |
| --- | --- |
| using | Base = AIToolbox::PolicyInterface< void, void, Action > |

Protected Attributes inherited from AIToolbox::PolicyInterface< void, void, Action >

| Type | Member |
| --- | --- |
| Action | A |
| RandomEngine | rand_ |

Detailed Description

This class represents the Learning with Linear Rewards algorithm.

The LLR algorithm is used on multi-armed bandits, where multiple actions can be taken at the same time.

As described in the paper, this algorithm is extremely flexible: it allows multiple actions to be taken at each timestep, and it leaves room for any algorithm that can solve the action-selection maximization problem. This is possible because the action space can be arbitrarily restricted.

This makes a fully generic implementation of the paper difficult, since it would need to accept an arbitrary maximization algorithm and use it internally. We chose not to do this here.

Here we implement a simple version where a single, factored action is allowed, and we use Variable Elimination (VE) to solve the action-selection problem. This essentially amounts to running VE with UCB1 weights, together with some learning.
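The following is a minimal sketch of that idea, not AIToolbox's implementation. It assumes a chain of agents in which each pair of consecutive agents shares one reward factor, stored as a table over their local joint actions; the names `LocalStats`, `llrIndex` and `selectAction` are hypothetical. Each local entry receives an LLR-style index, assumed here to be its empirical mean plus sqrt((L + 1) ln t / m), where m is the entry's play count and L is the number of factors played per joint action. Variable elimination along the chain then picks the joint action that maximizes the sum of these indices.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <utility>
#include <vector>

// Hypothetical statistics for one pairwise factor between agent i and i + 1.
// Entries are indexed by the local joint action as a_i * k + a_{i+1}.
struct LocalStats {
    std::vector<double> mean;     // empirical mean reward of each local action
    std::vector<unsigned> count;  // number of times each local action was played
};

// LLR-style index (assumed form): mean + sqrt((L + 1) * ln(t) / count).
// Entries that were never played get +infinity so they are explored first.
double llrIndex(double mean, unsigned count, double t, double L) {
    if (count == 0) return std::numeric_limits<double>::infinity();
    return mean + std::sqrt((L + 1.0) * std::log(t) / count);
}

// Picks the joint action maximizing the sum of per-factor indices with
// variable elimination along the chain (agents eliminated last to first).
std::vector<std::size_t> selectAction(const std::vector<LocalStats>& factors,
                                      std::size_t k, double t) {
    const std::size_t n = factors.size() + 1;              // number of agents
    const double L = static_cast<double>(factors.size());  // factors per joint action
    std::vector<double> msg(k, 0.0);                       // best value of the eliminated tail
    std::vector<std::vector<std::size_t>> best(n - 1, std::vector<std::size_t>(k, 0));

    for (std::size_t i = n - 1; i-- > 0;) {  // factor i links agents i and i + 1
        assert(factors[i].mean.size() == k * k && factors[i].count.size() == k * k);
        std::vector<double> next(k, -std::numeric_limits<double>::infinity());
        for (std::size_t ai = 0; ai < k; ++ai) {
            for (std::size_t aj = 0; aj < k; ++aj) {
                const std::size_t idx = ai * k + aj;
                const double v =
                    llrIndex(factors[i].mean[idx], factors[i].count[idx], t, L) + msg[aj];
                if (v > next[ai]) { next[ai] = v; best[i][ai] = aj; }  // keep argmax over a_{i+1}
            }
        }
        msg = std::move(next);
    }

    // Choose agent 0's action, then recover the others from the stored argmaxes.
    std::vector<std::size_t> action(n, 0);
    for (std::size_t a = 1; a < k; ++a)
        if (msg[a] > msg[action[0]]) action[0] = a;
    for (std::size_t i = 0; i + 1 < n; ++i) action[i + 1] = best[i][action[i]];
    return action;
}
```

Calling `selectAction(factors, 2, 20.0)` on a two-factor chain with two actions per agent returns one action index per agent. A general factor graph would need an elimination order rather than the fixed chain order used in this sketch.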

Constructor & Destructor Documentation

◆ LLRPolicy()

AIToolbox::Factored::Bandit::LLRPolicy::LLRPolicy(const Experience & exp)

Basic constructor.

- Parameters

- exp: The Experience we learn from.

Member Function Documentation

◆ getActionProbability()

virtual double AIToolbox::Factored::Bandit::LLRPolicy::getActionProbability(const Action & a) const override

This function returns the probability of taking the specified action.

As sampleAction() is deterministic, we simply run it to check that the Action it returns is equal to the one passed as input.

- Parameters

- a: The selected action.

- Returns

- This function returns an approximation of the probability of choosing the input action.
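A minimal sketch of the reasoning described above, assuming a deterministic sampler: the probability is 1 if the sampled action matches the input and 0 otherwise. `deterministicActionProbability` and the `Action` alias are hypothetical stand-ins, not AIToolbox's code.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Assumption: a factored action holds one action index per agent.
using Action = std::vector<std::size_t>;

// For a deterministic policy, run the sampler once and compare its output
// with the queried action.
double deterministicActionProbability(const std::function<Action()>& sampleAction,
                                      const Action& a) {
    const Action chosen = sampleAction();
    assert(chosen.size() == a.size());  // both must cover the same agents
    return chosen == a ? 1.0 : 0.0;
}
```

Because the sampler is deterministic, a single call is enough; a stochastic policy would instead need its actual sampling distribution.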

◆ getExperience()

const Experience & AIToolbox::Factored::Bandit::LLRPolicy::getExperience() const

This function returns the Experience we use to learn.

- Returns

- The underlying Experience.

◆ sampleAction()

virtual Action AIToolbox::Factored::Bandit::LLRPolicy::sampleAction() const override

This function selects an action using LLR.

We construct a VE process in which the exploration bonus of each entry is computed independently. This is imprecise, because it overestimates the bonus and therefore over-explores.

For improved alternatives, look at MAUCE or ThompsonSamplingPolicy.
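One way to see why independent bonuses over-explore, sketched under a simplifying assumption: suppose each factor e contributes a UCB-style bonus sqrt(c / n_e), where c is a shared constant (for example proportional to ln t) and n_e is the play count of the chosen local action. Adding these per-factor bonuses never gives less than a single joint bound of the form sqrt(c * sum_e 1 / n_e), and with k equally-played factors it is larger by a factor of sqrt(k). The functions below are hypothetical, and the joint form is illustrative only; it is not claimed to be the exact bound MAUCE uses.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Bonus obtained by computing sqrt(c / n_e) independently for every factor e
// and adding the results.
double summedBonus(const std::vector<double>& counts, double c) {
    double total = 0.0;
    for (double n : counts) {
        assert(n > 0.0);
        total += std::sqrt(c / n);
    }
    return total;
}

// Hypothetical joint bonus sqrt(c * sum_e 1 / n_e): one square root over the
// whole joint action instead of one per factor.
double jointBonus(const std::vector<double>& counts, double c) {
    double inverseSum = 0.0;
    for (double n : counts) {
        assert(n > 0.0);
        inverseSum += 1.0 / n;
    }
    return std::sqrt(c * inverseSum);
}
```

With four factors each played 4 times and c = 16, `summedBonus` returns 8 while `jointBonus` returns 4, a ratio of sqrt(4) = 2.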

- See also

- MAUCE

- ThompsonSamplingPolicy

- Returns

- The optimal action to take at the next timestep.

Implements AIToolbox::PolicyInterface< void, void, Action >.

The documentation for this class was generated from the following file:

- include/AIToolbox/Factored/Bandit/Policies/LLRPolicy.hpp