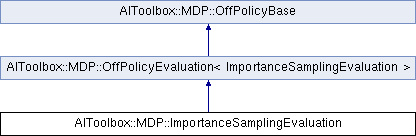

This class implements off-policy evaluation via importance sampling.

#include <AIToolbox/MDP/Algorithms/ImportanceSampling.hpp>

Public Types

| Type | Member |
|---|---|
| using | Parent = OffPolicyEvaluation< ImportanceSamplingEvaluation > |

Public Types inherited from AIToolbox::MDP::OffPolicyEvaluation< ImportanceSamplingEvaluation >

| Type | Member |
|---|---|
| using | Parent = OffPolicyBase |

Public Types inherited from AIToolbox::MDP::OffPolicyBase

| Type | Member |
|---|---|
| using | Trace = std::tuple< size_t, size_t, double > |
| using | Traces = std::vector< Trace > |

Public Member Functions

| Type | Member | Description |
|---|---|---|
|  | ImportanceSamplingEvaluation (const PolicyInterface &target, const PolicyInterface &behaviour, const double discount, const double alpha, const double tolerance) | Basic constructor. |

Public Member Functions inherited from AIToolbox::MDP::OffPolicyEvaluation< ImportanceSamplingEvaluation >

| Type | Member | Description |
|---|---|---|
|  | OffPolicyEvaluation (const PolicyInterface &target, double discount=1.0, double alpha=0.1, double tolerance=0.001) | Basic constructor. |
| void | stepUpdateQ (const size_t s, const size_t a, const size_t s1, const double rew) | This function updates the internal QFunction using the discount set during construction. |

Public Member Functions inherited from AIToolbox::MDP::OffPolicyBase

| Type | Member | Description |
|---|---|---|
|  | OffPolicyBase (size_t s, size_t a, double discount=1.0, double alpha=0.1, double tolerance=0.001) | Basic constructor. |
| void | setLearningRate (double a) | This function sets the learning rate parameter. |
| double | getLearningRate () const | This function returns the currently set learning rate parameter. |
| void | setDiscount (double d) | This function sets the new discount parameter. |
| double | getDiscount () const | This function returns the currently set discount parameter. |
| void | setTolerance (double t) | This function sets the trace cutoff parameter. |
| double | getTolerance () const | This function returns the currently set trace cutoff parameter. |
| void | clearTraces () | This function clears the currently stored traces. |
| const Traces & | getTraces () const | This function returns the currently stored traces. |
| void | setTraces (const Traces &t) | This function replaces the currently stored traces. |
| size_t | getS () const | This function returns the number of states on which the algorithm is working. |
| size_t | getA () const | This function returns the number of actions on which the algorithm is working. |
| const QFunction & | getQFunction () const | This function returns a reference to the internal QFunction. |
| void | setQFunction (const QFunction &qfun) | This function sets the internal QFunction directly. |

Additional Inherited Members

Protected Member Functions inherited from AIToolbox::MDP::OffPolicyBase

| Type | Member | Description |
|---|---|---|
| void | updateTraces (size_t s, size_t a, double error, double traceDiscount) | This function updates the traces using the input data. |

Protected Attributes inherited from AIToolbox::MDP::OffPolicyEvaluation< ImportanceSamplingEvaluation >

| Type | Member |
|---|---|
| const PolicyInterface & | target_ |

Protected Attributes inherited from AIToolbox::MDP::OffPolicyBase

| Type | Member |
|---|---|
| size_t | S |
| size_t | A |
| double | discount_ |
| double | alpha_ |
| double | tolerance_ |
| QFunction | q_ |
| Traces | traces_ |

Detailed Description

This class implements off-policy evaluation via importance sampling.

This off-policy algorithm weights the traces by the ratio between the probability of the chosen action under the target policy and its probability under the behaviour policy.

The idea is that if an action is much less likely to be taken by the behaviour policy than by the target policy, we should count it more, as if to "simulate" the returns we would get when acting with the target policy.

Conversely, if an action is much more likely to be taken by the behaviour policy than by the target policy, we count it less, since we will probably see this action picked far more often than the target policy would pick it.
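The weighting described above reduces to a per-step ratio. The following C++ sketch computes it for a tabular policy; `PolicyTable` and `importanceRatio` are hypothetical names chosen for illustration, not part of AIToolbox's `PolicyInterface`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical tabular policy for illustration: probs[s][a] = P(a | s).
// This is not AIToolbox's PolicyInterface.
using PolicyTable = std::vector<std::vector<double>>;

// Per-step importance-sampling ratio for taking action a in state s:
//   rho = P_target(a | s) / P_behaviour(a | s)
// rho > 1: the behaviour policy picks a less often than the target would,
//          so this sample counts more.
// rho < 1: the behaviour policy picks a more often than the target would,
//          so this sample counts less.
double importanceRatio(const PolicyTable& target, const PolicyTable& behaviour,
                       std::size_t s, std::size_t a) {
    // The ratio is undefined for actions the behaviour policy never takes.
    assert(behaviour[s][a] > 0.0);
    return target[s][a] / behaviour[s][a];
}
```

For example, with a target policy of `{0.8, 0.2}` and a behaviour policy of `{0.2, 0.8}` in a single state, action 0 gets a ratio of 4 and action 1 a ratio of 0.25.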

While this method is correct in theory, in practice it suffers from extremely high, possibly infinite, variance. A sequence of lucky (or unlucky) action choices can either cut the traces or, even worse, inflate them to very large values, which heavily skews the results.
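The variance problem comes from multiplying these ratios along a trajectory. This minimal sketch leaves out the discount and assumes the trace is simply dropped once its weight falls below the `tolerance` cutoff; `cumulativeWeight` is a hypothetical helper, not a library function.

```cpp
#include <cassert>
#include <vector>

// Cumulative importance weight of a trajectory prefix: the product of the
// per-step ratios rho_t = P_target(a_t | s_t) / P_behaviour(a_t | s_t).
// The discount factor is left out for clarity.
// Once the running product drops below `tolerance` the trace is treated as
// cut and 0 is returned (an assumed cutoff rule, for illustration only).
double cumulativeWeight(const std::vector<double>& ratios, double tolerance) {
    double w = 1.0;
    for (double rho : ratios) {
        assert(rho >= 0.0);  // probability ratios are never negative
        w *= rho;
        if (w < tolerance) return 0.0;  // trace cut
    }
    return w;  // may grow without bound for "lucky" runs
}
```

Ten steps with ratio 2 yield a weight of 1024, while a ratio of 0.25 drives the weight below a tolerance of 0.001 after five steps (0.25^5 is about 0.00098), and a single action the target never takes (ratio 0) cuts the trace at once. Both extremes come out of the same estimator, which is the source of the variance.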

Member Typedef Documentation

◆ Parent

| using AIToolbox::MDP::ImportanceSamplingEvaluation::Parent = OffPolicyEvaluation<ImportanceSamplingEvaluation> |
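The `Parent` typedef shows that `ImportanceSamplingEvaluation` passes itself as the template argument of its base class, which is the curiously recurring template pattern (CRTP). The standalone sketch below illustrates how such a base can call into the derived class without virtual functions; `OffPolicyEvaluationSketch`, `ConstantRatioEvaluation` and the `getTraceDiscount` hook are hypothetical names, not the library's confirmed interface.

```cpp
#include <cassert>
#include <cstddef>

// Generic base: the derived class is passed as the template parameter, so the
// base can call into it statically (no virtual dispatch).
template <typename Derived>
class OffPolicyEvaluationSketch {
  public:
    explicit OffPolicyEvaluationSketch(double discount) : discount_(discount) {}

    // Combines the discount with the derived class's per-step trace weight.
    double traceDiscount(std::size_t s, std::size_t a) const {
        return discount_ * static_cast<const Derived*>(this)->getTraceDiscount(s, a);
    }

  protected:
    double discount_;
};

// Hypothetical derived class that supplies a fixed ratio as its trace weight.
class ConstantRatioEvaluation
    : public OffPolicyEvaluationSketch<ConstantRatioEvaluation> {
  public:
    using Parent = OffPolicyEvaluationSketch<ConstantRatioEvaluation>;

    ConstantRatioEvaluation(double discount, double ratio)
        : Parent(discount), ratio_(ratio) {
        assert(ratio >= 0.0);  // importance ratios are non-negative
    }

    // Hook called by the base class; a real evaluator would compute
    // target(a | s) / behaviour(a | s) here.
    double getTraceDiscount(std::size_t, std::size_t) const { return ratio_; }

  private:
    double ratio_;
};
```

The design choice is that each off-policy variant only has to provide its trace weight, while the shared update logic lives once in the base template.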

Constructor & Destructor Documentation

◆ ImportanceSamplingEvaluation()

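| AIToolbox::MDP::ImportanceSamplingEvaluation::ImportanceSamplingEvaluation (const PolicyInterface &target, const PolicyInterface &behaviour, const double discount, const double alpha, const double tolerance) | inline |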

Basic constructor.

- Parameters
  - target: Target policy.
  - behaviour: Behaviour policy.
  - discount: Discount for the problem.
  - alpha: Learning rate parameter.
  - tolerance: Trace cutoff parameter.
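To make the roles of `target`, `behaviour`, `discount`, `alpha` and `tolerance` concrete, here is a minimal standalone sketch of one evaluation step. It does not use AIToolbox: `PolicyTable`, `ISEvaluationSketch` and its members are hypothetical, and it assumes an expected TD error under the target policy, accumulating traces, and a trace decay of `discount` times the importance ratio of the current action. The library's actual update may differ.

```cpp
#include <cassert>
#include <cstddef>
#include <tuple>
#include <utility>
#include <vector>

// Hypothetical tabular policy: probs[s][a] = P(a | s). Not AIToolbox's PolicyInterface.
using PolicyTable = std::vector<std::vector<double>>;
// Same shape as the documented typedefs: (state, action, eligibility).
using Trace = std::tuple<std::size_t, std::size_t, double>;
using Traces = std::vector<Trace>;

// Sketch of importance-sampling off-policy evaluation with eligibility traces.
class ISEvaluationSketch {
  public:
    ISEvaluationSketch(const PolicyTable& target, const PolicyTable& behaviour,
                       double discount, double alpha, double tolerance)
        : target_(target), behaviour_(behaviour), discount_(discount),
          alpha_(alpha), tolerance_(tolerance),
          q_(target.size(), std::vector<double>(target.at(0).size(), 0.0)) {}

    void stepUpdateQ(std::size_t s, std::size_t a, std::size_t s1, double rew) {
        // Expected value of s1 when acting with the target policy.
        double v1 = 0.0;
        for (std::size_t b = 0; b < q_[s1].size(); ++b) v1 += target_[s1][b] * q_[s1][b];
        const double error = rew + discount_ * v1 - q_[s][a];

        // Importance ratio of the action actually taken by the behaviour policy.
        assert(behaviour_[s][a] > 0.0);
        const double rho = target_[s][a] / behaviour_[s][a];
        updateTraces(s, a, error, discount_ * rho);
    }

    const std::vector<std::vector<double>>& getQ() const { return q_; }
    const Traces& getTraces() const { return traces_; }

  private:
    void updateTraces(std::size_t s, std::size_t a, double error, double traceDiscount) {
        Traces kept;
        bool found = false;
        for (auto& [ts, ta, el] : traces_) {
            el *= traceDiscount;                                  // decay old traces
            if (ts == s && ta == a) { el += 1.0; found = true; }  // accumulate current pair
            if (el >= tolerance_) kept.emplace_back(ts, ta, el);  // cut tiny traces
        }
        if (!found) kept.emplace_back(s, a, 1.0);
        traces_ = std::move(kept);
        // Propagate the TD error to every pair that is still eligible.
        for (const auto& [ts, ta, el] : traces_) q_[ts][ta] += alpha_ * error * el;
    }

    const PolicyTable& target_;
    const PolicyTable& behaviour_;
    double discount_, alpha_, tolerance_;
    std::vector<std::vector<double>> q_;
    Traces traces_;
};
```

With a deterministic target and a uniform behaviour policy over two actions, every action the target agrees with has ratio 2, so with a discount of 0.9 older traces grow by a factor of 1.8 per step; a single action the target never takes has ratio 0 and cuts all older traces.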

The documentation for this class was generated from the following file:

- include/AIToolbox/MDP/Algorithms/ImportanceSampling.hpp