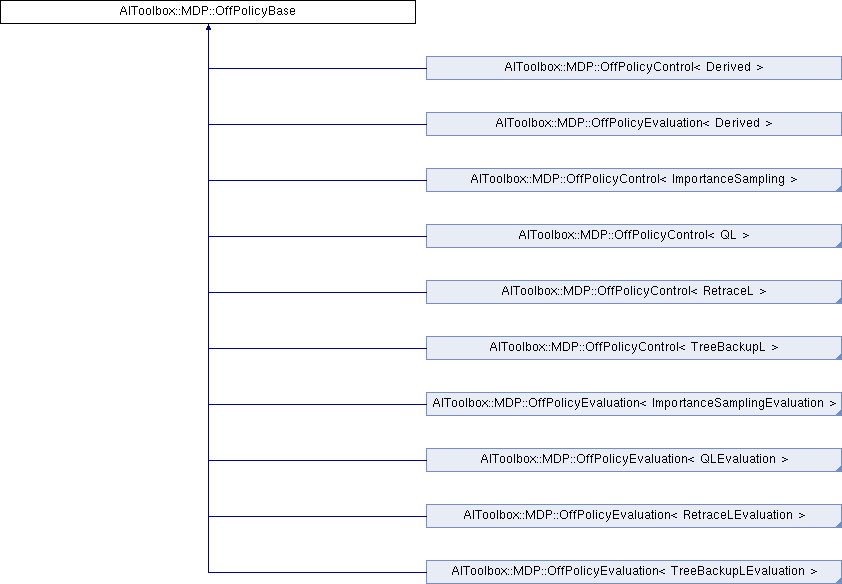

This class contains all the boilerplate code shared by off-policy methods.


#include <AIToolbox/MDP/Algorithms/Utils/OffPolicyTemplate.hpp>

| Type | Member | Description |
|---|---|---|
| using | `Trace = std::tuple<size_t, size_t, double>` | |
| using | `Traces = std::vector<Trace>` | |
| void | `updateTraces(size_t s, size_t a, double error, double traceDiscount)` | This function updates the traces using the input data. |


◆ Trace

◆ Traces
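Each `Trace` is a `std::tuple<size_t, size_t, double>`. Judging from the `updateTraces(s, a, ...)` signature, its fields are presumably a state, an action, and that pair's current trace coefficient; this field order is an assumption. The sketch below shows how such a tuple can be read with a structured binding. `eligibilityOf` is a hypothetical helper for illustration, not part of AIToolbox.

```cpp
#include <cassert>
#include <cstddef>
#include <tuple>
#include <vector>

// Same aliases as the class; the (state, action, coefficient) field order is assumed.
using Trace = std::tuple<std::size_t, std::size_t, double>;
using Traces = std::vector<Trace>;

// Hypothetical helper: returns the coefficient stored for the pair (s, a),
// or 0.0 if no trace exists for that pair.
double eligibilityOf(const Traces& traces, std::size_t s, std::size_t a) {
    for (const auto& [ts, ta, el] : traces) {
        if (ts == s && ta == a) return el;
    }
    return 0.0;
}
```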

◆ OffPolicyBase()

`AIToolbox::MDP::OffPolicyBase::OffPolicyBase(size_t s, size_t a, double discount = 1.0, double alpha = 0.1, double tolerance = 0.001)`

Basic constructor.

- Parameters

| Name | Description |
|---|---|
| s | The size of the state space. |
| a | The size of the action space. |
| discount | The discount factor of the environment. |
| alpha | The learning rate. |
| tolerance | The cutoff point for eligibility traces. |

◆ clearTraces()

`void AIToolbox::MDP::OffPolicyBase::clearTraces()`

This function clears the already set traces.

◆ getA()

`size_t AIToolbox::MDP::OffPolicyBase::getA() const`

This function returns the number of actions this method is working with.

- Returns

- The number of actions.

◆ getDiscount()

`double AIToolbox::MDP::OffPolicyBase::getDiscount() const`

This function returns the currently set discount parameter.

- Returns

- The currently set discount parameter.

◆ getLearningRate()

`double AIToolbox::MDP::OffPolicyBase::getLearningRate() const`

This function returns the currently set learning rate parameter.

- Returns

- The currently set learning rate parameter.

◆ getQFunction()

`const QFunction& AIToolbox::MDP::OffPolicyBase::getQFunction() const`

This function returns a reference to the internal QFunction.

The returned reference can be used to build Policies, for example MDP::QGreedyPolicy.

- Returns

- The internal QFunction.
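As an illustration of building a policy on top of a Q-function, the sketch below picks the greedy action in a given state. It uses a nested `std::vector<double>` as a hypothetical stand-in for `QFunction` (the real type is not used here), and `greedyAction` is an illustrative helper, not a library function.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <vector>

// Hypothetical stand-in for QFunction: q[s][a] is the value of action a in state s.
using QTable = std::vector<std::vector<double>>;

// Returns the action with the highest Q-value in state s.
// Ties go to the lowest action index, because std::max_element
// returns the first maximum it encounters.
std::size_t greedyAction(const QTable& q, std::size_t s) {
    assert(s < q.size() && !q[s].empty());
    const auto& row = q[s];
    return static_cast<std::size_t>(
        std::distance(row.begin(), std::max_element(row.begin(), row.end())));
}
```

A full policy class such as MDP::QGreedyPolicy may handle ties differently; this sketch only shows the argmax idea.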

◆ getS()

`size_t AIToolbox::MDP::OffPolicyBase::getS() const`

This function returns the number of states this method is working with.

- Returns

- The number of states.

◆ getTolerance()

`double AIToolbox::MDP::OffPolicyBase::getTolerance() const`

This function returns the currently set trace cutoff parameter.

- Returns

- The currently set trace cutoff parameter.

◆ getTraces()

`const Traces& AIToolbox::MDP::OffPolicyBase::getTraces() const`

This function returns the currently set traces.

- Returns

- The currently set traces.

◆ setDiscount()

`void AIToolbox::MDP::OffPolicyBase::setDiscount(double d)`

This function sets the new discount parameter.

The discount parameter controls how much we care about future rewards. If it is 1, a reward is worth the same whether it is obtained now or in a million timesteps, so the algorithm optimizes the total accumulated reward. When it is less than 1, rewards obtained in the present are valued more than future rewards.

- Parameters

| Name | Description |
|---|---|
| d | The new discount factor. |
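To make the effect of the discount concrete: a reward received t steps in the future is weighted by discount^t. The sketch below computes the discounted return of a finite reward sequence. `discountedReturn` is a hypothetical helper, not part of this class, and the [0, 1] range it asserts is an assumption for the illustration.

```cpp
#include <cassert>
#include <vector>

// Computes r_0 + d*r_1 + d^2*r_2 + ... by folding from the last reward backwards.
double discountedReturn(const std::vector<double>& rewards, double discount) {
    assert(discount >= 0.0 && discount <= 1.0);  // assumed valid range
    double ret = 0.0;
    for (auto it = rewards.rbegin(); it != rewards.rend(); ++it)
        ret = *it + discount * ret;
    return ret;
}
```

With a discount of 1.0, the rewards `{1, 1, 1}` sum to 3; with 0.5 they give 1 + 0.5 + 0.25 = 1.75.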

◆ setLearningRate()

`void AIToolbox::MDP::OffPolicyBase::setLearningRate(double a)`

This function sets the learning rate parameter.

The learning rate determines how quickly the QFunction is modified in response to new data. In fully deterministic environments (such as an agent moving through a grid), this parameter can safely be set to 1.0 for maximum learning speed.

In stochastic environments, on the other hand, this parameter should start relatively high when learning begins and decrease slowly over time in order for learning to converge.

Alternatively, it can be kept relatively high if the environment dynamics change progressively, so that the algorithm keeps adapting. The final behaviour depends heavily on this parameter.

The learning rate parameter must be > 0.0 and <= 1.0, otherwise the function will throw an std::invalid_argument.

- Parameters

| Name | Description |
|---|---|
| a | The new learning rate parameter. |
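The sketch below mirrors the documented range check (values outside (0, 1] are rejected with `std::invalid_argument`) and adds one common way to decrease the rate over time, a hyperbolic decay. Both functions are hypothetical helpers; the decay schedule is an illustrative choice, not something this documentation prescribes.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>

// Mirrors the documented contract: the learning rate must be in (0, 1].
double checkedLearningRate(double a) {
    if (!(a > 0.0 && a <= 1.0))
        throw std::invalid_argument("learning rate must be in (0, 1]");
    return a;
}

// Illustrative schedule: initial / (1 + decay * step).
// With decay >= 0 the result never exceeds `initial` and shrinks as step grows.
double decayedLearningRate(double initial, std::size_t step, double decay) {
    assert(decay >= 0.0);
    return checkedLearningRate(initial / (1.0 + decay * static_cast<double>(step)));
}
```

A value computed this way could then be passed to setLearningRate() between episodes.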

◆ setQFunction()

`void AIToolbox::MDP::OffPolicyBase::setQFunction(const QFunction & qfun)`

This function allows you to set the internal QFunction directly.

This can be useful in order to use a QFunction that has already been computed elsewhere.

- Parameters

| Name | Description |
|---|---|
| qfun | The new QFunction to set. |

◆ setTolerance()

`void AIToolbox::MDP::OffPolicyBase::setTolerance(double t)`

This function sets the trace cutoff parameter.

This parameter determines when a trace is removed: once its coefficient becomes too small, it is no longer worth updating the corresponding value.

- Parameters

| Name | Description |
|---|---|
| t | The new trace cutoff value. |
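Assuming a trace starts with coefficient 1, is multiplied by a constant factor at every step (such as the `traceDiscount` passed to updateTraces()), and is removed once it drops below the tolerance, the cutoff bounds how many steps a trace can survive. The hypothetical helper below counts those steps; it is an illustration of the idea, not library code.

```cpp
#include <cassert>
#include <cstddef>

// Counts how many multiplications by `decay` it takes for a coefficient
// that starts at 1.0 to fall below `tolerance`.
std::size_t traceLifetime(double decay, double tolerance) {
    assert(decay > 0.0 && decay < 1.0);  // otherwise the trace never shrinks
    assert(tolerance > 0.0);             // otherwise it is never cut off
    std::size_t steps = 0;
    for (double el = 1.0; el >= tolerance; el *= decay) ++steps;
    return steps;
}
```

For example, with the default tolerance of 0.001 and a factor of 0.5, the coefficient falls below the cutoff after 10 decays; a larger tolerance keeps fewer traces alive and so reduces the work per update.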

◆ setTraces()

`void AIToolbox::MDP::OffPolicyBase::setTraces(const Traces & t)`

This function sets the internal traces.

This method is provided in case you need to tinker with the internal traces. You generally don't need it unless you are building on top of this class in order to do something more complicated.

- Parameters

| Name | Description |
|---|---|
| t | The new traces. |

◆ updateTraces()

`void AIToolbox::MDP::OffPolicyBase::updateTraces(size_t s, size_t a, double error, double traceDiscount)`

protected

This function updates the traces using the input data.

This operation is essentially identical to what SARSAL does.

- See also

- SARSAL::stepUpdateQ

- Parameters

| Name | Description |
|---|---|
| s | The state we were in before. |
| a | The action we took. |
| error | The error used to update the QFunction. |
| traceDiscount | The discount applied to all traces in memory. |
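For readers unfamiliar with eligibility traces, the sketch below shows one common scheme (replacing traces) on the same `Traces` layout. It is not the AIToolbox implementation: the `(state, action, coefficient)` field order, the nested `std::vector` used in place of `QFunction`, the assumption that `error` already includes the learning rate, and the order of the update, decay, and pruning steps are all assumptions made for illustration. `updateTracesSketch` is a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <tuple>
#include <vector>

using Trace = std::tuple<std::size_t, std::size_t, double>;  // (state, action, coefficient), assumed
using Traces = std::vector<Trace>;
using QTable = std::vector<std::vector<double>>;            // hypothetical stand-in for QFunction

// Hypothetical replacing-trace update:
// 1. the visited pair (s, a) gets coefficient 1;
// 2. every traced pair receives error * coefficient;
// 3. all coefficients decay by traceDiscount;
// 4. traces whose coefficient fell below tolerance are dropped.
void updateTracesSketch(QTable& q, Traces& traces, std::size_t s, std::size_t a,
                        double error, double traceDiscount, double tolerance) {
    assert(s < q.size() && a < q[s].size());
    bool found = false;
    for (auto& [ts, ta, el] : traces) {
        if (ts == s && ta == a) { el = 1.0; found = true; }
    }
    if (!found) traces.emplace_back(s, a, 1.0);

    for (auto& [ts, ta, el] : traces) {
        q[ts][ta] += error * el;
        el *= traceDiscount;
    }

    traces.erase(std::remove_if(traces.begin(), traces.end(),
                                [tolerance](const Trace& t) { return std::get<2>(t) < tolerance; }),
                 traces.end());
}
```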

◆ A

`size_t AIToolbox::MDP::OffPolicyBase::A`

protected

◆ alpha_

`double AIToolbox::MDP::OffPolicyBase::alpha_`

protected

◆ discount_

`double AIToolbox::MDP::OffPolicyBase::discount_`

protected

◆ q_

`QFunction AIToolbox::MDP::OffPolicyBase::q_`

protected

◆ S

`size_t AIToolbox::MDP::OffPolicyBase::S`

protected

◆ tolerance_

`double AIToolbox::MDP::OffPolicyBase::tolerance_`

protected

◆ traces_

`Traces AIToolbox::MDP::OffPolicyBase::traces_`

protected
