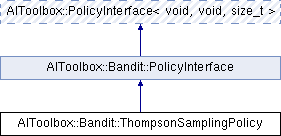

This class implements a Thompson sampling policy.

#include <AIToolbox/Bandit/Policies/ThompsonSamplingPolicy.hpp>

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | ThompsonSamplingPolicy(const Experience & exp) | Basic constructor. |
| virtual size_t | sampleAction() const override | This function chooses an action using Thompson sampling. |
| virtual double | getActionProbability(const size_t & a) const override | This function returns the probability of taking the specified action. |
| virtual Vector | getPolicy() const override | This function returns a vector containing all probabilities of the policy. |
| const Experience & | getExperience() const | This function returns a reference to the underlying Experience we use. |

Public Member Functions inherited from AIToolbox::PolicyInterface< void, void, size_t >

| Return type | Member | Description |
|---|---|---|
| | PolicyInterface(void s, size_t a) | Basic constructor. |
| | virtual ~PolicyInterface() | Basic virtual destructor. |
| virtual size_t | sampleAction(const void & s) const = 0 | This function chooses a random action for state s, following the policy distribution. |
| virtual double | getActionProbability(const void & s, const size_t & a) const = 0 | This function returns the probability of taking the specified action in the specified state. |
| const void & | getS() const | This function returns the number of states of the world. |
| const size_t & | getA() const | This function returns the number of available actions to the agent. |

Additional Inherited Members

Public Types inherited from AIToolbox::Bandit::PolicyInterface

| Kind | Name | Definition |
|---|---|---|
| using | Base | AIToolbox::PolicyInterface< void, void, size_t > |

Protected Attributes inherited from AIToolbox::PolicyInterface< void, void, size_t >

| Type | Name |
|---|---|
| void | S |
| size_t | A |
| RandomEngine | rand_ |

Detailed Description

This class implements a Thompson sampling policy.

This class uses Student-t distributions, one per arm, to model normally distributed rewards with unknown mean and variance. As more experience is gained, each Student-t distribution approaches a Normal distribution that models the mean of its respective arm.
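A minimal, self-contained sketch of the sampling step described above. It assumes a standard non-informative prior, under which each arm's mean has a Student-t posterior with n - 1 degrees of freedom, centred on the sample mean and scaled by s/sqrt(n), where s² is the sample variance of that arm's n rewards. The ArmStats struct, the record() helper (Welford's online update of count, mean and M2) and sampleThompson() are hypothetical names for illustration only; this is not AIToolbox's implementation, and its Experience class may store and use these statistics differently. Returning any arm with fewer than two observations straight away is also an assumption of this sketch.

```cpp
#include <cmath>
#include <cstddef>
#include <limits>
#include <random>
#include <vector>

// Hypothetical per-arm statistics (not AIToolbox's Experience layout).
struct ArmStats {
    std::size_t count = 0; // number of rewards observed for this arm
    double mean = 0.0;     // running sample mean of the rewards
    double m2 = 0.0;       // running sum of squared deviations from the mean
};

// Welford's online update of count, mean and M2 after observing `reward`.
void record(ArmStats & arm, double reward) {
    ++arm.count;
    const double delta = reward - arm.mean;
    arm.mean += delta / static_cast<double>(arm.count);
    arm.m2 += delta * (reward - arm.mean);
}

// One Thompson-sampling step: draw a plausible mean for every arm from its
// Student-t posterior and return the arm with the largest draw.
// Assumes `arms` is non-empty.
std::size_t sampleThompson(const std::vector<ArmStats> & arms, std::mt19937 & rng) {
    std::size_t best = 0;
    double bestValue = -std::numeric_limits<double>::infinity();
    for (std::size_t a = 0; a < arms.size(); ++a) {
        const ArmStats & arm = arms[a];
        // Assumption: with fewer than two rewards the sample variance is
        // undefined, so such an arm is tried immediately.
        if (arm.count < 2) return a;
        const double n = static_cast<double>(arm.count);
        // Posterior scale s / sqrt(n), with s^2 = M2 / (n - 1).
        const double scale = std::sqrt(arm.m2 / (n * (n - 1.0)));
        std::student_t_distribution<double> t(n - 1.0);
        const double value = arm.mean + scale * t(rng);
        if (value > bestValue) {
            bestValue = value;
            best = a;
        }
    }
    return best;
}
```

As n grows, the scale shrinks like 1/sqrt(n) and the Student-t tails thin out, so well-explored arms are sampled close to their empirical mean; this is the convergence towards a Normal noted in the description.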

Constructor & Destructor Documentation

◆ ThompsonSamplingPolicy()

AIToolbox::Bandit::ThompsonSamplingPolicy::ThompsonSamplingPolicy(const Experience & exp)

Basic constructor.

- Parameters
  - exp: The Experience we learn from.

Member Function Documentation

◆ getActionProbability()

double AIToolbox::Bandit::ThompsonSamplingPolicy::getActionProbability(const size_t & a) const [override, virtual]

This function returns the probability of taking the specified action.

WARNING: In this class, the only way to compute the true probability of selecting the input action is via numerical integration, since it depends on |A| Normal random variables, one per arm. To avoid this, we instead sample many times and return the fraction of samples in which the input action was selected, which only approximates the true probability. This makes this function very slow: do not call it casually.

- Parameters
  - a: The selected action.

- Returns
  - An approximation of the probability of choosing the input action.
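The approximation described in the warning is a Monte Carlo estimate. A minimal sketch, assuming a hypothetical callable sampleOnce that performs a single Thompson-sampling draw and returns the chosen action; the function name and the trials parameter are illustrative and are not part of AIToolbox's API.

```cpp
#include <cstddef>
#include <functional>

// Monte Carlo estimate of P(sampleOnce() == a): perform `trials` independent
// Thompson-sampling draws and return the fraction that selected action `a`.
double estimateActionProbability(const std::function<std::size_t()> & sampleOnce,
                                 std::size_t a, std::size_t trials) {
    if (trials == 0) return 0.0; // no draws, no information
    std::size_t hits = 0;
    for (std::size_t i = 0; i < trials; ++i)
        if (sampleOnce() == a) ++hits;
    return static_cast<double>(hits) / static_cast<double>(trials);
}
```

The standard error of such an estimate shrinks only as 1/sqrt(trials), so an accurate answer needs many draws, each of which samples every arm; this is why the function is slow.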

◆ getExperience()

const Experience & AIToolbox::Bandit::ThompsonSamplingPolicy::getExperience() const

This function returns a reference to the underlying Experience we use.

- Returns
  - The internal Experience reference.

◆ getPolicy()

Vector AIToolbox::Bandit::ThompsonSamplingPolicy::getPolicy() const [override, virtual]

This function returns a vector containing all probabilities of the policy.

Ideally, call this function only when you repeatedly need to access the same policy values efficiently.

WARNING: This can be very expensive, as it does essentially the same sampling work as getActionProbability(). It should not be slower than a single call to that function, however, so if you need the whole policy, call this method rather than querying each action separately.

Implements AIToolbox::Bandit::PolicyInterface.
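A single batch of draws can produce an estimate for every action at once, which is why computing the whole policy need not cost more than one getActionProbability() call. A minimal sketch, assuming a hypothetical callable sampleOnce that performs one Thompson-sampling draw and returns an action in [0, A); the names and the trials parameter are illustrative, not AIToolbox's, and std::vector stands in for the library's Vector type.

```cpp
#include <cstddef>
#include <functional>
#include <vector>

// Estimate the full policy from one batch of draws: count how often each
// action wins, then normalise the counts into frequencies.
// Assumes sampleOnce() always returns a value smaller than A.
std::vector<double> estimatePolicy(const std::function<std::size_t()> & sampleOnce,
                                   std::size_t A, std::size_t trials) {
    std::vector<double> policy(A, 0.0);
    if (trials == 0) return policy; // no draws: return all zeros
    for (std::size_t i = 0; i < trials; ++i)
        policy[sampleOnce()] += 1.0;
    for (double & p : policy)
        p /= static_cast<double>(trials);
    return policy;
}
```

Each draw updates exactly one counter, so all |A| estimates share the same trials and the cost is that of a single probability estimate.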

◆ sampleAction()

size_t AIToolbox::Bandit::ThompsonSamplingPolicy::sampleAction() const [override, virtual]

This function chooses an action using Thompson sampling.

- Returns
  - The chosen action.

The documentation for this class was generated from the following file:

- include/AIToolbox/Bandit/Policies/ThompsonSamplingPolicy.hpp