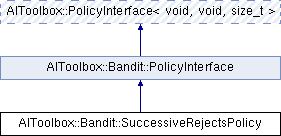

This class implements the successive rejects algorithm.

#include <AIToolbox/Bandit/Policies/SuccessiveRejectsPolicy.hpp>

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| | SuccessiveRejectsPolicy (const Experience &experience, unsigned budget) | Basic constructor. |
| virtual size_t | sampleAction () const override | This function selects the current action to explore. |
| void | stepUpdateQ () | This function updates the current phase, nK_, and prunes actions from the pool. |
| bool | canRecommendAction () const | This function returns whether a single action remains in the pool. |
| size_t | recommendAction () const | If the pool has a single element, this function returns the best estimated action after the SR exploration process. |
| size_t | getCurrentPhase () const | This function returns the current phase. |
| size_t | getCurrentNk () const | This function returns the nK_ for the current phase. |
| size_t | getPreviousNk () const | This function returns the nK_ for the previous phase. |
| virtual double | getActionProbability (const size_t &a) const override | This function is fairly useless for SR, but it returns either 1.0 or 0.0 depending on which action is currently scheduled to be pulled. |
| virtual Vector | getPolicy () const override | This function probably should not be called, but otherwise is what you would expect given the current timestep. |
| const Experience & | getExperience () const | This function returns a reference to the underlying Experience we use. |

Public Member Functions inherited from AIToolbox::PolicyInterface< void, void, size_t >

| Return type | Member | Description |
| --- | --- | --- |
| | PolicyInterface (void s, size_t a) | Basic constructor. |
| virtual | ~PolicyInterface () | Basic virtual destructor. |
| virtual size_t | sampleAction (const void &s) const=0 | This function chooses a random action for state s, following the policy distribution. |
| virtual double | getActionProbability (const void &s, const size_t &a) const=0 | This function returns the probability of taking the specified action in the specified state. |
| const void & | getS () const | This function returns the number of states of the world. |
| const size_t & | getA () const | This function returns the number of available actions to the agent. |

Additional Inherited Members

Public Types inherited from AIToolbox::Bandit::PolicyInterface

| Kind | Declaration |
| --- | --- |
| using | Base = AIToolbox::PolicyInterface< void, void, size_t > |

Protected Attributes inherited from AIToolbox::PolicyInterface< void, void, size_t >

| Type | Name |
| --- | --- |
| void | S |
| size_t | A |
| RandomEngine | rand_ |

Detailed Description

This class implements the successive rejects algorithm.

The successive rejects (SR) algorithm is a budget-based pure exploration algorithm. Its goal is to simply recommend the best possible action after its budget of pulls has been exhausted. The reward accumulated during the exploration phase is irrelevant to the algorithm itself, which is only focused on optimizing the quality of the final recommendation.

The way SR works is to split the available budget into phases. During each phase, each arm is pulled a certain (nKNew_ - nKOld_) number of times, which depends on the current phase. After these pulls, the arm with the lowest empirical mean is removed from the pool of arms to be evaluated.
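As an illustration of how a budget can be split into phases, the sketch below computes the cumulative per-arm pull counts using the schedule from the original SR formulation, n_k = ceil((budget - K) / (logBar(K) * (K + 1 - k))) with logBar(K) = 1/2 + sum over i = 2..K of 1/i. That this class uses exactly this schedule is an assumption; the helper names `srSchedule` and `srTotalPulls` are hypothetical and not part of AIToolbox.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Cumulative pulls per surviving arm at the end of phases 1..K-1.
// Assumes the schedule of the original SR formulation, not necessarily
// the exact computation done inside the library.
std::vector<std::size_t> srSchedule(std::size_t K, std::size_t budget) {
    assert(K >= 2 && budget >= K);
    // logBar(K) = 1/2 + sum_{i=2..K} 1/i
    double logBar = 0.5;
    for (std::size_t i = 2; i <= K; ++i) logBar += 1.0 / static_cast<double>(i);

    std::vector<std::size_t> nK;
    for (std::size_t k = 1; k < K; ++k) {
        const double v = static_cast<double>(budget - K) /
                         (logBar * static_cast<double>(K + 1 - k));
        nK.push_back(static_cast<std::size_t>(std::ceil(v)));
    }
    return nK;
}

// Total pulls consumed: phase k has (K + 1 - k) arms in the pool, and each
// of them is pulled (nK[k] - nK[k-1]) additional times.
std::size_t srTotalPulls(const std::vector<std::size_t>& nK) {
    const std::size_t K = nK.size() + 1;
    std::size_t total = 0, prev = 0;
    for (std::size_t k = 1; k < K; ++k) {
        total += (K + 1 - k) * (nK[k - 1] - prev);
        prev = nK[k - 1];
    }
    return total;
}
```

With 4 arms and a budget of 100, this schedule gives cumulative counts 16, 21 and 31, for a total of 99 pulls, which stays within the budget.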

The algorithm keeps removing arms from the pool until a single arm remains, which corresponds to the final recommended arm.
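The whole procedure can be sketched as a single free function, independent of the library. This is a minimal sketch under stated assumptions: the phase lengths follow the schedule of the original SR formulation, and `successiveRejects` and its `pull` callback are hypothetical names introduced only for this example.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <numeric>
#include <vector>

// Runs SR over K arms and returns the index of the surviving arm.
// `pull(a)` is a hypothetical callback returning one reward sample for arm a.
std::size_t successiveRejects(std::size_t K, std::size_t budget,
                              const std::function<double(std::size_t)>& pull) {
    assert(K >= 2 && budget >= K);
    double logBar = 0.5;
    for (std::size_t i = 2; i <= K; ++i) logBar += 1.0 / static_cast<double>(i);

    std::vector<std::size_t> pool(K);
    std::iota(pool.begin(), pool.end(), std::size_t{0});
    std::vector<double> sum(K, 0.0);   // accumulated reward per arm
    std::size_t nOld = 0;

    for (std::size_t k = 1; k < K; ++k) {
        const auto nNew = static_cast<std::size_t>(std::ceil(
            static_cast<double>(budget - K) /
            (logBar * static_cast<double>(K + 1 - k))));
        // Every arm still in the pool gets (nNew - nOld) more pulls.
        for (const std::size_t a : pool)
            for (std::size_t i = nOld; i < nNew; ++i) sum[a] += pull(a);
        // All pooled arms now have nNew pulls each, so comparing sums is
        // equivalent to comparing empirical means.
        const auto worst = std::min_element(
            pool.begin(), pool.end(),
            [&sum](std::size_t l, std::size_t r) { return sum[l] < sum[r]; });
        pool.erase(worst);
        nOld = nNew;
    }
    return pool.front();
}
```

Calling it with a callback that always returns a fixed mean per arm, for example 0.2, 0.9, 0.5 and 0.1, rejects the arms in order of increasing mean and leaves arm 1 as the recommendation.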

Constructor & Destructor Documentation

◆ SuccessiveRejectsPolicy()

| AIToolbox::Bandit::SuccessiveRejectsPolicy::SuccessiveRejectsPolicy | ( | const Experience & experience, unsigned budget | ) |

Basic constructor.

- Parameters

- experience: The experience gathering pull data of the bandit.
- budget: The overall pull budget for the exploration.

Member Function Documentation

◆ canRecommendAction()

| bool AIToolbox::Bandit::SuccessiveRejectsPolicy::canRecommendAction | ( | ) | const |

This function returns whether a single action remains in the pool.

◆ getActionProbability()

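| virtual double AIToolbox::Bandit::SuccessiveRejectsPolicy::getActionProbability | ( | const size_t & a | ) | const override |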

This function is of little use for SR: it returns 1.0 for the action currently scheduled to be pulled and 0.0 for every other action.
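The behavior described here amounts to a deterministic, one-hot distribution over actions. The sketch below illustrates that idea with plain standard-library types; `actionProbability`, `oneHotPolicy` and the `scheduled` parameter are hypothetical names, and the real class uses its own Vector type rather than `std::vector`.

```cpp
#include <cstddef>
#include <vector>

// Probability of action `a` when `scheduled` is the action due to be pulled.
double actionProbability(std::size_t a, std::size_t scheduled) {
    return a == scheduled ? 1.0 : 0.0;
}

// The same information for all A actions at once, as a one-hot vector.
std::vector<double> oneHotPolicy(std::size_t A, std::size_t scheduled) {
    std::vector<double> p(A, 0.0);
    if (scheduled < A) p[scheduled] = 1.0;
    return p;
}
```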

◆ getCurrentNk()

| size_t AIToolbox::Bandit::SuccessiveRejectsPolicy::getCurrentNk | ( | ) | const |

This function returns the nK_ for the current phase.

◆ getCurrentPhase()

| size_t AIToolbox::Bandit::SuccessiveRejectsPolicy::getCurrentPhase | ( | ) | const |

This function returns the current phase.

Note that once the exploration process has ended, the current phase will be equal to the number of actions.

◆ getExperience()

| const Experience& AIToolbox::Bandit::SuccessiveRejectsPolicy::getExperience | ( | ) | const |

This function returns a reference to the underlying Experience we use.

- Returns

- The internal Experience reference.

◆ getPolicy()

| virtual Vector AIToolbox::Bandit::SuccessiveRejectsPolicy::getPolicy | ( | ) | const override |

This function should rarely need to be called for SR. It returns the policy for the current timestep, consistent with getActionProbability().

Implements AIToolbox::Bandit::PolicyInterface.

◆ getPreviousNk()

| size_t AIToolbox::Bandit::SuccessiveRejectsPolicy::getPreviousNk | ( | ) | const |

This function returns the nK_ for the previous phase.

This is needed as the number of pulls for each arm in any given phase is equal to the new Nk minus the old Nk.

◆ recommendAction()

| size_t AIToolbox::Bandit::SuccessiveRejectsPolicy::recommendAction | ( | ) | const |

If the pool has a single element, this function returns the best estimated action after the SR exploration process.

◆ sampleAction()

| virtual size_t AIToolbox::Bandit::SuccessiveRejectsPolicy::sampleAction | ( | ) | const override |

This function selects the current action to explore.

Given how SR works, it simply selects each arm (nKNew_ - nKOld_) times, before cycling to the next action.

- Returns

- The chosen action.

◆ stepUpdateQ()

| void AIToolbox::Bandit::SuccessiveRejectsPolicy::stepUpdateQ | ( | ) |

This function updates the current phase, nK_, and prunes actions from the pool.

This function must be called each timestep after the Experience has been updated.

If needed, it will trigger pulling the next action in sequence. If all actions have been pulled (nKNew_ - nKOld_) times, it will increase the current phase, update both nK values and perform the appropriate pruning using the current reward estimates contained in the underlying Experience.
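To make the required call order concrete, the sketch below uses a small stand-in class that mirrors the method names documented on this page. `MiniSR`, its `record()` method (standing in for the Experience update) and `runSR` are hypothetical and are not the library implementation; the phase schedule is passed in as strictly increasing cumulative pull counts to keep the example short.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <utility>
#include <vector>

// Hypothetical stand-in mirroring the documented method names.
class MiniSR {
public:
    // nK: cumulative pulls per arm at the end of each phase (K - 1 entries).
    MiniSR(std::size_t K, std::vector<std::size_t> nK)
        : nK_(std::move(nK)), pool_(K), sum_(K, 0.0) {
        std::iota(pool_.begin(), pool_.end(), std::size_t{0});
    }

    std::size_t sampleAction() const { return pool_[idx_]; }
    bool canRecommendAction() const { return pool_.size() == 1; }
    std::size_t recommendAction() const { return pool_.front(); }

    // Stands in for updating the Experience with the observed reward.
    void record(std::size_t a, double reward) { sum_[a] += reward; }

    // Call once per timestep, after record().
    void stepUpdateQ() {
        if (canRecommendAction()) return;
        const std::size_t perPhase = nK_[phase_] - (phase_ ? nK_[phase_ - 1] : 0);
        if (++pulls_ < perPhase) return;    // same arm again next timestep
        pulls_ = 0;
        if (++idx_ < pool_.size()) return;  // move on to the next arm
        // Phase complete: reject the arm with the lowest empirical mean.
        // All pooled arms have equal pull counts, so sums compare like means.
        idx_ = 0;
        const auto worst = std::min_element(
            pool_.begin(), pool_.end(),
            [this](std::size_t l, std::size_t r) { return sum_[l] < sum_[r]; });
        pool_.erase(worst);
        ++phase_;
    }

private:
    std::vector<std::size_t> nK_, pool_;
    std::vector<double> sum_;
    std::size_t phase_ = 0, idx_ = 0, pulls_ = 0;
};

// Drives the policy until one arm is left; rewards are deterministic here.
std::size_t runSR(MiniSR& sr, const std::vector<double>& rewards) {
    while (!sr.canRecommendAction()) {
        const std::size_t a = sr.sampleAction();
        sr.record(a, rewards[a]);  // 1. update the experience first
        sr.stepUpdateQ();          // 2. then advance the policy
    }
    return sr.recommendAction();
}
```

The key point is the ordering inside the loop: the reward is recorded before `stepUpdateQ()` runs, so that the pruning at the end of a phase sees up-to-date estimates.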

The documentation for this class was generated from the following file:

- include/AIToolbox/Bandit/Policies/SuccessiveRejectsPolicy.hpp