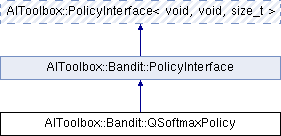

This class implements a softmax policy through a QFunction.

#include <AIToolbox/Bandit/Policies/QSoftmaxPolicy.hpp>

Public Member Functions | |

| QSoftmaxPolicy (const QFunction &q, double temperature=1.0) | |

| Basic constructor. | |

| virtual size_t | sampleAction () const override |

| This function chooses an action for state s with probability dependent on value. | |

| virtual double | getActionProbability (const size_t &a) const override |

| This function returns the probability of taking the specified action in the specified state. | |

| void | setTemperature (double t) |

| This function sets the temperature parameter. | |

| double | getTemperature () const |

| This function will return the currently set temperature parameter. | |

| virtual Vector | getPolicy () const override |

| This function returns a vector containing all probabilities of the policy. | |

Public Member Functions inherited from AIToolbox::PolicyInterface< void, void, size_t > | |

| PolicyInterface (void s, size_t a) | |

| Basic constructor. | |

| virtual | ~PolicyInterface () |

| Basic virtual destructor. | |

| virtual size_t | sampleAction (const void &s) const=0 |

| This function chooses a random action for state s, following the policy distribution. | |

| virtual double | getActionProbability (const void &s, const size_t &a) const=0 |

| This function returns the probability of taking the specified action in the specified state. | |

| const void & | getS () const |

| This function returns the number of states of the world. | |

| const size_t & | getA () const |

| This function returns the number of available actions to the agent. | |

Additional Inherited Members | |

Public Types inherited from AIToolbox::Bandit::PolicyInterface | |

| using | Base = AIToolbox::PolicyInterface< void, void, size_t > |

Protected Attributes inherited from AIToolbox::PolicyInterface< void, void, size_t > | |

| void | S |

| size_t | A |

| RandomEngine | rand_ |

Detailed Description

This class implements a softmax policy through a QFunction.

A softmax policy selects actions based on their expected reward: the more advantageous an action seems to be, the more probable its selection. There are many ways to implement a softmax policy; this class uses the most common one, sampling from a Boltzmann distribution.

Like an epsilon-greedy policy, this type of policy is useful for forcing the agent to explore an unknown model, so that it gains new information, refines its estimates, and thus earns more reward.

Constructor & Destructor Documentation

◆ QSoftmaxPolicy()

| AIToolbox::Bandit::QSoftmaxPolicy::QSoftmaxPolicy | ( | const QFunction & | q, | double | temperature = 1.0 | ) |

Basic constructor.

- Parameters

-

q The QFunction to act upon.

temperature The temperature for the softmax equation.

Member Function Documentation

◆ getActionProbability()

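| virtual double AIToolbox::Bandit::QSoftmaxPolicy::getActionProbability | ( | const size_t & | a | ) | const | override virtual |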

This function returns the probability of taking the specified action in the specified state.

- See also

- sampleAction();

- Parameters

-

a The selected action.

- Returns

- The probability of taking the specified action in the specified state.

◆ getPolicy()

| virtual Vector AIToolbox::Bandit::QSoftmaxPolicy::getPolicy | ( | ) | const | override virtual |

This function returns a vector containing all probabilities of the policy.

Ideally, call this function only when the same policy values need to be accessed repeatedly and efficiently.

Implements AIToolbox::Bandit::PolicyInterface.
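The point about repeated access can be illustrated with a minimal, library-independent sketch. The names `policyVector` and `expectedValue` are hypothetical helpers written for this example and are not part of AIToolbox; plain `std::vector<double>` stands in for the library's `Vector` and `QFunction` types. The idea is to build the full probability vector once and then reuse it, instead of recomputing the normalisation for every single action query.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical stand-in for a getPolicy()-style call: returns every P(a) at once.
// Assumes q is non-empty and t > 0.
std::vector<double> policyVector(const std::vector<double> & q, double t) {
    assert(!q.empty() && t > 0.0);
    // Shifting by the maximum keeps the ratios intact and avoids overflow in exp().
    const double maxQ = *std::max_element(q.begin(), q.end());
    std::vector<double> p(q.size());
    double sum = 0.0;
    for (std::size_t a = 0; a < q.size(); ++a) {
        p[a] = std::exp((q[a] - maxQ) / t);
        sum += p[a];
    }
    for (double & x : p) x /= sum;
    return p;
}

// Expected value of q under an already computed policy vector.
// Every probability is read from the cached vector; nothing is recomputed.
double expectedValue(const std::vector<double> & q, const std::vector<double> & policy) {
    assert(q.size() == policy.size());
    return std::inner_product(q.begin(), q.end(), policy.begin(), 0.0);
}
```

Calling `policyVector` once and passing the result to `expectedValue` costs one pass over the actions for the normalisation, whereas querying each action's probability separately would repeat that pass once per action.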

◆ getTemperature()

| double AIToolbox::Bandit::QSoftmaxPolicy::getTemperature | ( | ) | const |

This function will return the currently set temperature parameter.

- Returns

- The currently set temperature parameter.

◆ sampleAction()

| virtual size_t AIToolbox::Bandit::QSoftmaxPolicy::sampleAction | ( | ) | const | override virtual |

This function chooses an action for state s with probability dependent on value.

This class implements softmax through the Boltzmann distribution. Thus an action will be chosen with probability:

\[ P(a) = \frac{e^{Q(a)/t}}{\sum_b e^{Q(b)/t}} \]

where t is the temperature. These probabilities are not cached anywhere, so sampling repeatedly may be slow.

- Returns

- The chosen action.
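As a rough illustration of Boltzmann sampling, the sketch below computes the action probabilities from a vector of values and draws one action from them. It is not the AIToolbox implementation: `boltzmannProbabilities` and `sampleBoltzmann` are hypothetical names, `std::vector<double>` stands in for the QFunction, and `std::mt19937` stands in for the library's random engine. Subtracting the maximum value before exponentiating is a common numerical safeguard added here; it does not change the resulting probabilities.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical helper: P(a) = exp(Q(a)/t) / sum_b exp(Q(b)/t).
// Assumes q is non-empty and t > 0.
std::vector<double> boltzmannProbabilities(const std::vector<double> & q, double t) {
    assert(!q.empty() && t > 0.0);
    // The common factor exp(-maxQ/t) cancels between numerator and denominator.
    const double maxQ = *std::max_element(q.begin(), q.end());
    std::vector<double> p(q.size());
    double sum = 0.0;
    for (std::size_t a = 0; a < q.size(); ++a) {
        p[a] = std::exp((q[a] - maxQ) / t);
        sum += p[a];
    }
    for (double & x : p) x /= sum;
    return p;
}

// Hypothetical helper: draws one action index according to those probabilities.
// The probabilities are recomputed on every call, mirroring the "not cached" note.
std::size_t sampleBoltzmann(const std::vector<double> & q, double t, std::mt19937 & rng) {
    const std::vector<double> p = boltzmannProbabilities(q, t);
    std::discrete_distribution<std::size_t> dist(p.begin(), p.end());
    return dist(rng);
}
```

With values `{1.0, 2.0, 3.0}` and `t = 1.0`, each action is `e` times more likely than the one before it, so the third action is drawn most often while the first remains possible.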

◆ setTemperature()

| void AIToolbox::Bandit::QSoftmaxPolicy::setTemperature | ( | double | t | ) |

This function sets the temperature parameter.

The temperature parameter determines the amount of exploration this policy will enforce when selecting actions. Following the Boltzmann distribution, as the temperature approaches infinity all actions become equally probable. Conversely, as the temperature approaches zero, action selection becomes completely greedy.

The temperature parameter must be >= 0.0; otherwise the function throws std::invalid_argument.

- Parameters

-

t The new temperature parameter.
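The effect of the temperature and the validation rule can be sketched with a small stand-in class. `SoftmaxSketch` is hypothetical and is not the AIToolbox class; it only mirrors the documented contract that negative temperatures raise `std::invalid_argument`. How a temperature of exactly zero is handled is an assumption of this sketch: it is treated as the greedy limit, with probability split evenly among the maximal actions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical stand-in for the policy, written only to illustrate temperature handling.
class SoftmaxSketch {
    public:
        SoftmaxSketch(std::vector<double> q, double t) : q_(std::move(q)) {
            assert(!q_.empty());
            setTemperature(t);
        }

        // Mirrors the documented contract: temperatures below zero are rejected.
        void setTemperature(double t) {
            if (t < 0.0) throw std::invalid_argument("Temperature must be >= 0");
            t_ = t;
        }

        double getTemperature() const { return t_; }

        std::vector<double> probabilities() const {
            const double maxQ = *std::max_element(q_.begin(), q_.end());
            std::vector<double> p(q_.size());
            double sum = 0.0;
            for (std::size_t a = 0; a < q_.size(); ++a) {
                // Assumption: t == 0 means fully greedy, ties shared equally.
                p[a] = (t_ == 0.0) ? (q_[a] == maxQ ? 1.0 : 0.0)
                                   : std::exp((q_[a] - maxQ) / t_);
                sum += p[a];
            }
            for (double & x : p) x /= sum;
            return p;
        }

    private:
        std::vector<double> q_;
        double t_ = 1.0;
};
```

For values `{1.0, 2.0}`, a very large temperature such as `1e9` yields probabilities close to one half each, while a temperature of zero puts all probability on the second action. A rejected call to `setTemperature` leaves the previous temperature in place.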

The documentation for this class was generated from the following file:

- include/AIToolbox/Bandit/Policies/QSoftmaxPolicy.hpp