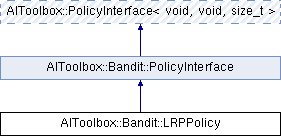

This class implements the Linear Reward Penalty algorithm.

#include <AIToolbox/Bandit/Policies/LRPPolicy.hpp>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | LRPPolicy (size_t A, double a, double b=0.0) | Basic constructor. |
| void | stepUpdateP (size_t a, bool result) | This function updates the LRP policy based on the result of the action. |
| virtual size_t | sampleAction () const override | This function chooses an action, following the policy distribution. |
| virtual double | getActionProbability (const size_t &a) const override | This function returns the probability of taking the specified action. |
| void | setAParam (double a) | This function sets the a parameter. |
| double | getAParam () const | This function will return the currently set a parameter. |
| void | setBParam (double b) | This function sets the b parameter. |
| double | getBParam () const | This function will return the currently set b parameter. |
| virtual Vector | getPolicy () const override | This function returns a vector containing all probabilities of the policy. |

Public Member Functions inherited from AIToolbox::PolicyInterface< void, void, size_t >

| Type | Member | Description |
|---|---|---|
| | PolicyInterface (void s, size_t a) | Basic constructor. |
| virtual | ~PolicyInterface () | Basic virtual destructor. |
| virtual size_t | sampleAction (const void &s) const=0 | This function chooses a random action for state s, following the policy distribution. |
| virtual double | getActionProbability (const void &s, const size_t &a) const=0 | This function returns the probability of taking the specified action in the specified state. |
| const void & | getS () const | This function returns the number of states of the world. |
| const size_t & | getA () const | This function returns the number of available actions to the agent. |

Additional Inherited Members

Public Types inherited from AIToolbox::Bandit::PolicyInterface

| Kind | Declaration |
|---|---|
| using | Base = AIToolbox::PolicyInterface< void, void, size_t > |

Protected Attributes inherited from AIToolbox::PolicyInterface< void, void, size_t >

| Type | Name |
|---|---|
| void | S |
| size_t | A |
| RandomEngine | rand_ |

Detailed Description

This class implements the Linear Reward Penalty algorithm.

This algorithm performs direct policy updates depending on whether a given action was a success or a failure.

In particular, the variant called "Linear Reward-Inaction" (where the 'b' parameter is set to zero) is guaranteed to converge to the optimal policy in a stationary environment.

This algorithm can also be used in multi-agent settings, where it usually converges to a Nash equilibrium.

Successful updates take the form:

$$p(t+1) = p(t) + a\,\bigl(1 - p(t)\bigr) \qquad \text{for the action taken}$$

$$p(t+1) = p(t) - a\,p(t) \qquad \text{for all other actions}$$

Failure updates take the form:

$$p(t+1) = (1 - b)\,p(t) \qquad \text{for the action taken}$$

$$p(t+1) = \frac{b}{|A| - 1} + (1 - b)\,p(t) \qquad \text{for all other actions}$$
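The update rules above can be sketched as a standalone C++ function operating on a plain probability vector. This is a minimal sketch, assuming the policy is stored as a std::vector<double>; the function name lrpUpdate is hypothetical and is not the AIToolbox implementation, which exposes the update through stepUpdateP.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch of the LRP update rules (not the AIToolbox code).
// p:       one probability per action, assumed to sum to 1
// taken:   index of the action that was executed
// success: binary outcome of that action
// a, b:    learning parameters for successes and failures
void lrpUpdate(std::vector<double>& p, std::size_t taken, bool success,
               double a, double b) {
    assert(taken < p.size());
    const std::size_t A = p.size();
    for (std::size_t i = 0; i < A; ++i) {
        if (success) {
            // Reward: pull the taken action towards 1, shrink all others.
            p[i] = (i == taken) ? p[i] + a * (1.0 - p[i])
                                : p[i] - a * p[i];
        } else {
            // Penalty: shrink the taken action, share b among the others.
            // The division is only evaluated for i != taken, so A == 1 is safe.
            p[i] = (i == taken) ? (1.0 - b) * p[i]
                                : b / static_cast<double>(A - 1) + (1.0 - b) * p[i];
        }
    }
}
```

For example, starting from a uniform policy over three actions, a success on action 0 with a = 0.1 gives (0.4, 0.3, 0.3); a subsequent failure on action 0 with b = 0.1 gives (0.36, 0.32, 0.32). Both updates keep the probabilities summing to 1.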

Constructor & Destructor Documentation

◆ LRPPolicy()

AIToolbox::Bandit::LRPPolicy::LRPPolicy(size_t A, double a, double b = 0.0)

Basic constructor.

The 'a' and 'b' parameters control learning: 'a' sets how much the policy changes when an action succeeds, while 'b' sets how much it changes when an action fails.

Setting 'b' to zero results in an algorithm called "Linear Reward-Inaction", while setting 'a' == 'b' results in the "Linear Reward-Penalty" algorithm. Setting 'a' to zero results in the "Linear Inaction-Penalty" algorithm.

By default, the policy is initialized to a uniform distribution over all actions.

- Parameters
  - A: The size of the action space.
  - a: The learning parameter on successful actions.
  - b: The learning parameter on failed actions.
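To illustrate the Linear Reward-Inaction variant (b = 0), the following sketch simulates a Bernoulli bandit, starting from a uniform policy and applying the LRP updates after each pull. It is a minimal standalone sketch, not the AIToolbox API: simulateLRP and the per-action success probabilities are hypothetical, and code using the library would call the class's own sampleAction and stepUpdateP instead.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical simulation (not the AIToolbox API). successProb[i] is the
// assumed chance that action i succeeds; a and b are the LRP parameters.
// Returns the action probabilities after `steps` pulls.
std::vector<double> simulateLRP(const std::vector<double>& successProb,
                                double a, double b, std::size_t steps,
                                unsigned seed) {
    const std::size_t A = successProb.size();
    assert(A >= 2);
    std::vector<double> p(A, 1.0 / static_cast<double>(A)); // uniform start
    std::mt19937 rng(seed);
    for (std::size_t t = 0; t < steps; ++t) {
        // Sample an action from the current policy.
        std::discrete_distribution<std::size_t> pick(p.begin(), p.end());
        const std::size_t taken = pick(rng);
        // Draw a binary outcome for that action.
        const bool success = std::bernoulli_distribution(successProb[taken])(rng);
        // Apply the success or failure update to every action.
        for (std::size_t i = 0; i < A; ++i) {
            if (success)
                p[i] = (i == taken) ? p[i] + a * (1.0 - p[i]) : (1.0 - a) * p[i];
            else
                p[i] = (i == taken) ? (1.0 - b) * p[i]
                                    : b / static_cast<double>(A - 1) + (1.0 - b) * p[i];
        }
    }
    return p;
}
```

For instance, simulateLRP({0.9, 0.1}, 0.01, 0.0, 5000, 42) should return a policy that puts almost all of its mass on the first action. With b = 0 every pure policy is absorbing (neither a success nor a failure moves it), so a larger a learns faster but raises the risk of locking onto a worse action early.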

Member Function Documentation

◆ getActionProbability()

virtual double AIToolbox::Bandit::LRPPolicy::getActionProbability(const size_t & a) const override

This function returns the probability of taking the specified action.

- Parameters
  - a: The selected action.

- Returns
  - The probability of taking the selected action.

◆ getAParam()

double AIToolbox::Bandit::LRPPolicy::getAParam() const

This function will return the currently set a parameter.

- Returns
  - The currently set a parameter.

◆ getBParam()

double AIToolbox::Bandit::LRPPolicy::getBParam() const

This function will return the currently set b parameter.

- Returns
  - The currently set b parameter.

◆ getPolicy()

virtual Vector AIToolbox::Bandit::LRPPolicy::getPolicy() const override

This function returns a vector containing all probabilities of the policy.

Ideally, this function should be called only when the same policy values need to be accessed repeatedly and efficiently.

Implements AIToolbox::Bandit::PolicyInterface.

◆ sampleAction()

virtual size_t AIToolbox::Bandit::LRPPolicy::sampleAction() const override

This function chooses an action, following the policy distribution.

- Returns
  - The chosen action.

◆ setAParam()

void AIToolbox::Bandit::LRPPolicy::setAParam(double a)

This function sets the a parameter.

The a parameter determines the amount of learning on successful actions.

- Parameters
  - a: The new a parameter.

◆ setBParam()

void AIToolbox::Bandit::LRPPolicy::setBParam(double b)

This function sets the b parameter.

The b parameter determines the amount of learning on failed actions.

- Parameters
  - b: The new b parameter.

◆ stepUpdateP()

void AIToolbox::Bandit::LRPPolicy::stepUpdateP(size_t a, bool result)

This function updates the LRP policy based on the result of the action.

Note that LRP works with binary rewards: either the action worked or it didn't.

Environments with real-valued rewards can also be handled: scale all rewards to the [0, 1] range, then register a success with probability equal to the scaled reward. The expected outcome of each action then equals its scaled reward, so the binary signal is equivalent in expectation to the original reward function.
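The reward conversion described above can be sketched as a small helper that turns a real-valued reward into the binary result expected by stepUpdateP. This is a minimal sketch, not part of AIToolbox: rewardToSuccess is a hypothetical name, the reward bounds rMin and rMax are assumed to be known in advance, and std::clamp requires C++17.

```cpp
#include <algorithm>
#include <cassert>
#include <random>

// Hypothetical helper (not part of AIToolbox): converts a real-valued reward
// into a binary success signal. rMin and rMax are assumed known reward bounds.
bool rewardToSuccess(double reward, double rMin, double rMax, std::mt19937& rng) {
    assert(rMax > rMin);
    // Scale the reward to [0, 1], clamping values outside the assumed bounds.
    const double scaled = std::clamp((reward - rMin) / (rMax - rMin), 0.0, 1.0);
    // Report a success with probability equal to the scaled reward.
    return std::bernoulli_distribution(scaled)(rng);
}
```

With rewards in [0, 10], for example, a reward of 2.5 yields a success about 25% of the time, so over many pulls the success rate tracks the scaled reward.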

- Parameters
  - a: The action taken.
  - result: Whether the action taken was a success or not.

The documentation for this class was generated from the following file:

- include/AIToolbox/Bandit/Policies/LRPPolicy.hpp