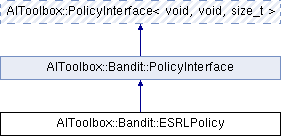

This class implements the Exploring Selfish Reinforcement Learning algorithm.

#include <AIToolbox/Bandit/Policies/ESRLPolicy.hpp>

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| | ESRLPolicy (size_t A, double a, unsigned timesteps, unsigned explorationPhases, unsigned window) | Basic constructor. |
| void | stepUpdateP (size_t a, bool result) | This function updates the ESRL policy based on the result of the action. |
| bool | isExploiting () const | This function returns whether ESRL is now in the exploiting phase. |
| virtual size_t | sampleAction () const override | This function chooses an action, following the policy distribution. |
| virtual double | getActionProbability (const size_t &a) const override | This function returns the probability of taking the specified action. |
| void | setAParam (double a) | This function sets the a parameter. |
| double | getAParam () const | This function will return the currently set a parameter. |
| void | setTimesteps (unsigned t) | This function sets the required number of timesteps per exploration phase. |
| unsigned | getTimesteps () const | This function returns the currently set number of timesteps per exploration phase. |
| void | setExplorationPhases (unsigned p) | This function sets the required number of exploration phases before exploitation. |
| unsigned | getExplorationPhases () const | This function returns the currently set number of exploration phases before exploitation. |
| void | setWindowSize (unsigned window) | This function sets the size of the timestep window to compute the value of the action that ESRL is converging to. |
| unsigned | getWindowSize () const | This function returns the currently set size of the timestep window to compute the value of an action. |
| virtual Vector | getPolicy () const override | This function returns a vector containing all probabilities of the policy. |

Public Member Functions inherited from AIToolbox::PolicyInterface< void, void, size_t >

| Return type | Member | Description |
| --- | --- | --- |
| | PolicyInterface (void s, size_t a) | Basic constructor. |
| virtual | ~PolicyInterface () | Basic virtual destructor. |
| virtual size_t | sampleAction (const void &s) const=0 | This function chooses a random action for state s, following the policy distribution. |
| virtual double | getActionProbability (const void &s, const size_t &a) const=0 | This function returns the probability of taking the specified action in the specified state. |
| const void & | getS () const | This function returns the number of states of the world. |
| const size_t & | getA () const | This function returns the number of available actions to the agent. |

Additional Inherited Members

Public Types inherited from AIToolbox::Bandit::PolicyInterface

| using | Base = AIToolbox::PolicyInterface< void, void, size_t > |

Protected Attributes inherited from AIToolbox::PolicyInterface< void, void, size_t >

| void | S |

| size_t | A |

| RandomEngine | rand_ |

Detailed Description

This class implements the Exploring Selfish Reinforcement Learning algorithm.

This is a learning algorithm for common interest games. It tries to consider both Nash equilibria and Pareto-optimal solutions in order to maximize the payoffs to the agents.

The original algorithm can be modified in order to work with non-cooperative games, but here we implement only the most general version for cooperative games.

An important point for this algorithm is that each agent only considers its own payoffs, and in the cooperative case does not need to communicate with the other agents.

The idea is to repeatedly use the Linear Reward-Inaction (LRI) algorithm to converge to a Nash equilibrium in the action space, then cut the corresponding action from the action space and repeat the procedure. Applied recursively, this would find all Nash equilibria.
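As a rough illustration of the Linear Reward-Inaction step mentioned above, the following is a minimal standalone sketch of the textbook update rule. It does not use AIToolbox and is not taken from the library's source; the function name `lriUpdate` is hypothetical, and `a` is assumed to play the role of the learning parameter passed to the constructor.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Textbook Linear Reward-Inaction update over a probability vector.
// Hypothetical helper for illustration only; not the library's code.
// `probs` is assumed to sum to 1, and `a` is the learning rate in [0, 1].
inline void lriUpdate(std::vector<double> & probs, std::size_t action,
                      bool success, double a) {
    assert(action < probs.size());
    assert(a >= 0.0 && a <= 1.0);

    // "Inaction": a failed action leaves the policy untouched.
    if (!success) return;

    for (std::size_t i = 0; i < probs.size(); ++i) {
        if (i == action)
            probs[i] += a * (1.0 - probs[i]); // move the chosen action toward 1
        else
            probs[i] -= a * probs[i];         // shrink every other action
    }
}
```

Because the chosen action gains exactly what the other actions lose, the vector keeps summing to 1, and repeated successes on one action drive its probability toward 1.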

This whole process is then repeated multiple times to ensure that most of the equilibria have been explored.

During each exploration step, a rolling average is maintained in order to estimate the value of the action the LRI algorithm converged to.
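One plausible reading of the windowed estimate is sketched below: only the last `window` binary outcomes of an exploration phase contribute to the value of the converged action. The helper `windowedValue` is hypothetical, and it keeps the full outcome history for clarity; the library may well track this differently (for example with a running counter).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Fraction of successes among the last `window` outcomes of one phase.
// Hypothetical helper for illustration only; not the library's code.
inline double windowedValue(const std::vector<bool> & results, unsigned window) {
    assert(window > 0 && window <= results.size());

    unsigned successes = 0;
    // Only the tail of the phase is counted, when LRI has mostly converged.
    for (std::size_t i = results.size() - window; i < results.size(); ++i)
        if (results[i]) ++successes;

    return static_cast<double>(successes) / window;
}
```

Restricting the average to the end of the phase keeps early, still-random action choices from diluting the estimate.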

After all exploration phases have been done, the best action seen is chosen and repeated forever during the final exploitation phase.

Constructor & Destructor Documentation

◆ ESRLPolicy()

| AIToolbox::Bandit::ESRLPolicy::ESRLPolicy | ( | size_t | A, | double | a, | unsigned | timesteps, | unsigned | explorationPhases, | unsigned | window | ) |

Basic constructor.

- Parameters

- A: The size of the action space.
- a: The learning parameter for Linear Reward-Inaction.
- timesteps: The number of timesteps per exploration phase.
- explorationPhases: The number of exploration phases before exploitation.
- window: The last number of timesteps to consider to obtain the learned action value during a single exploration phase.

Member Function Documentation

◆ getActionProbability()

| virtual double AIToolbox::Bandit::ESRLPolicy::getActionProbability | ( | const size_t & | a | ) | const override |

This function returns the probability of taking the specified action.

- Parameters

- a: The selected action.

- Returns

- The probability of taking the selected action.

◆ getAParam()

| double AIToolbox::Bandit::ESRLPolicy::getAParam | ( | ) | const |

This function will return the currently set a parameter.

- Returns

- The currently set a parameter.

◆ getExplorationPhases()

| unsigned AIToolbox::Bandit::ESRLPolicy::getExplorationPhases | ( | ) | const |

This function returns the currently set number of exploration phases before exploitation.

- Returns

- The currently set number of exploration phases.

◆ getPolicy()

| virtual Vector AIToolbox::Bandit::ESRLPolicy::getPolicy | ( | ) | const override |

This function returns a vector containing all probabilities of the policy.

Ideally, this function should be called only when there is a repeated need to access the same policy values efficiently.

Implements AIToolbox::Bandit::PolicyInterface.

◆ getTimesteps()

| unsigned AIToolbox::Bandit::ESRLPolicy::getTimesteps | ( | ) | const |

This function returns the currently set number of timesteps per exploration phase.

- Returns

- The currently set number of timesteps.

◆ getWindowSize()

| unsigned AIToolbox::Bandit::ESRLPolicy::getWindowSize | ( | ) | const |

This function returns the currently set size of the timestep window to compute the value of an action.

- Returns

- The currently set window size.

◆ isExploiting()

| bool AIToolbox::Bandit::ESRLPolicy::isExploiting | ( | ) | const |

This function returns whether ESRL is now in the exploiting phase.

This method returns whether ESRLPolicy has finished learning. Once in the exploiting phase, the policy no longer learns and simply exploits the knowledge gained.

Thus, once this method returns true, there is no need to call the stepUpdateP method anymore (although doing so has no effect).

- Returns

- Whether ESRLPolicy is in the exploiting phase.
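The interplay between sampleAction, stepUpdateP and isExploiting can be sketched as a simple loop. To keep the example compilable without the library, it is written as a template and exercised with `StubPolicy`, a hypothetical stand-in that only mimics the three documented member names. The `pull` callable (action in, success flag out) and the `maxSteps` cap are also assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical stand-in exposing the three documented member names.
// It pretends to finish learning after a fixed number of updates.
struct StubPolicy {
    unsigned updatesLeft;
    std::size_t sampleAction() const { return 0; }
    void stepUpdateP(std::size_t, bool) { if (updatesLeft > 0) --updatesLeft; }
    bool isExploiting() const { return updatesLeft == 0; }
};

// Keeps sampling and updating until the policy reports that it is exploiting,
// or until maxSteps updates have been made. Returns the number of updates.
// `pull` is a hypothetical callable: action -> whether the action succeeded.
template <typename Policy, typename Pull>
unsigned trainUntilExploiting(Policy & policy, Pull pull, unsigned maxSteps) {
    assert(maxSteps > 0);

    unsigned steps = 0;
    while (!policy.isExploiting() && steps < maxSteps) {
        const std::size_t a = policy.sampleAction();
        policy.stepUpdateP(a, pull(a)); // feed the binary outcome back
        ++steps;
    }
    return steps;
}
```

Any type offering the same three member functions, presumably including ESRLPolicy itself, could be passed as `Policy`; after the loop ends with isExploiting() true, only sampleAction needs to be called.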

◆ sampleAction()

| virtual size_t AIToolbox::Bandit::ESRLPolicy::sampleAction | ( | ) | const override |

This function chooses an action, following the policy distribution.

- Returns

- The chosen action.

◆ setAParam()

| void AIToolbox::Bandit::ESRLPolicy::setAParam | ( | double | a | ) |

This function sets the a parameter.

The a parameter determines the amount of learning on successful actions.

- Parameters

- a: The new a parameter.

◆ setExplorationPhases()

| void AIToolbox::Bandit::ESRLPolicy::setExplorationPhases | ( | unsigned | p | ) |

This function sets the required number of exploration phases before exploitation.

- Parameters

- p: The new number of exploration phases.

◆ setTimesteps()

| void AIToolbox::Bandit::ESRLPolicy::setTimesteps | ( | unsigned | t | ) |

This function sets the required number of timesteps per exploration phase.

- Parameters

- t: The new number of timesteps.

◆ setWindowSize()

| void AIToolbox::Bandit::ESRLPolicy::setWindowSize | ( | unsigned | window | ) |

This function sets the size of the timestep window to compute the value of the action that ESRL is converging to.

- Parameters

- window: The new size of the average window.

◆ stepUpdateP()

| void AIToolbox::Bandit::ESRLPolicy::stepUpdateP | ( | size_t | a, | bool | result | ) |

This function updates the ESRL policy based on the result of the action.

Note that ESRL works with binary rewards: either the action worked or it didn't.

Environments with real-valued rewards can be simulated: scale all rewards to the [0,1] range, then stochastically report a success with probability equal to the scaled reward. In expectation, the result is equivalent to the original (scaled) reward function.
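The conversion from real-valued to binary rewards described above can be sketched with the standard library alone. The helper names `scaleReward` and `sampleSuccess` are hypothetical, and the reward bounds `minR` and `maxR` are assumed to be known in advance; none of this is part of the AIToolbox API.

```cpp
#include <cassert>
#include <random>

// Maps a reward from the known range [minR, maxR] onto [0, 1].
// Hypothetical helper for illustration only.
inline double scaleReward(double reward, double minR, double maxR) {
    assert(maxR > minR);
    assert(reward >= minR && reward <= maxR);
    return (reward - minR) / (maxR - minR);
}

// Draws a binary outcome that is a success with probability scaledReward.
template <typename Rng>
bool sampleSuccess(double scaledReward, Rng & rng) {
    assert(scaledReward >= 0.0 && scaledReward <= 1.0);
    std::bernoulli_distribution success(scaledReward);
    return success(rng);
}
```

The boolean returned by `sampleSuccess` is what would then be passed as the result argument of stepUpdateP.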

This function both updates the internal LRI algorithm, and checks whether a new exploration phase is warranted.

- Parameters

- a: The action taken.
- result: Whether the action taken was a success, or not.

The documentation for this class was generated from the following file:

- include/AIToolbox/Bandit/Policies/ESRLPolicy.hpp