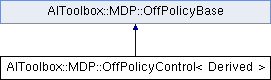

This class is a general version of off-policy control.

#include <AIToolbox/MDP/Algorithms/Utils/OffPolicyTemplate.hpp>

Public Types

| using | Parent = OffPolicyBase |

Public Types inherited from AIToolbox::MDP::OffPolicyBase

| using | Trace = std::tuple< size_t, size_t, double > |

| using | Traces = std::vector< Trace > |

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| | OffPolicyControl (size_t s, size_t a, double discount=1.0, double alpha=0.1, double tolerance=0.001, double epsilon=0.1) | Basic constructor. |
| void | stepUpdateQ (const size_t s, const size_t a, const size_t s1, const double rew) | This function updates the internal QFunction using the discount set during construction. |
| void | setEpsilon (double e) | This function sets the epsilon parameter. |
| double | getEpsilon () const | This function will return the currently set epsilon parameter. |

Public Member Functions inherited from AIToolbox::MDP::OffPolicyBase

| Return type | Member | Description |
| --- | --- | --- |
| | OffPolicyBase (size_t s, size_t a, double discount=1.0, double alpha=0.1, double tolerance=0.001) | Basic constructor. |
| void | setLearningRate (double a) | This function sets the learning rate parameter. |
| double | getLearningRate () const | This function will return the currently set learning rate parameter. |
| void | setDiscount (double d) | This function sets the new discount parameter. |
| double | getDiscount () const | This function returns the currently set discount parameter. |
| void | setTolerance (double t) | This function sets the trace cutoff parameter. |
| double | getTolerance () const | This function returns the currently set trace cutoff parameter. |
| void | clearTraces () | This function clears the already set traces. |
| const Traces & | getTraces () const | This function returns the currently set traces. |
| void | setTraces (const Traces &t) | This function replaces the currently set traces. |
| size_t | getS () const | This function returns the number of states on which QLearning is working. |
| size_t | getA () const | This function returns the number of actions on which QLearning is working. |
| const QFunction & | getQFunction () const | This function returns a reference to the internal QFunction. |
| void | setQFunction (const QFunction &qfun) | This function allows directly setting the internal QFunction. |

Protected Attributes

| double | epsilon_ |

Protected Attributes inherited from AIToolbox::MDP::OffPolicyBase

| size_t | S |

| size_t | A |

| double | discount_ |

| double | alpha_ |

| double | tolerance_ |

| QFunction | q_ |

| Traces | traces_ |

Additional Inherited Members

Protected Member Functions inherited from AIToolbox::MDP::OffPolicyBase

| Return type | Member | Description |
| --- | --- | --- |
| void | updateTraces (size_t s, size_t a, double error, double traceDiscount) | This function updates the traces using the input data. |

Detailed Description

template<typename Derived>

class AIToolbox::MDP::OffPolicyControl< Derived >

This class is a general version of off-policy control.

This class is used to compute the optimal QFunction, when you are actually acting and gathering data following another policy (which is why it's called off-policy). This is what QLearning does, for example.

As in the off-policy evaluation case, this method does not work well with a deterministic behaviour. Even worse, we're trying to find out the optimal policy, which is greedy by definition. Thus, this method assumes that the target is an epsilon greedy policy, and needs to know its epsilon. You should, over time, decrease the epsilon and this method should converge to the optimal QFunction.
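One way to decrease epsilon over time is a simple decay schedule. The sketch below is only an illustration: `decayedEpsilon`, its parameters, and the exponential form are hypothetical choices, not part of the library.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>

// Hypothetical exponential decay schedule (not a library function):
// epsilon = startEps * decayRate^episode, kept inside [minEps, 1].
// Assumes 0 <= minEps <= 1 and 0 <= decayRate <= 1.
double decayedEpsilon(double startEps, double decayRate, std::size_t episode, double minEps = 0.0) {
    const double eps = startEps * std::pow(decayRate, static_cast<double>(episode));
    return std::min(1.0, std::max(minEps, eps));
}
```

The returned value could then be passed to setEpsilon() between episodes.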

Note that this class does not necessarily encompass all off-policy control methods. It only covers those that use eligibility traces in a certain form, such as ImportanceSampling, RetraceLambda, etc.

This class is supposed to be used as a CRTP parent. The child must derive from it while passing itself as the template argument, that is, from OffPolicyControl< Child >:
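A minimal sketch of that derivation pattern follows. `ControlBaseSketch` is a hypothetical stand-in so the snippet compiles without the library; with AIToolbox available, the parent would be `OffPolicyControl<Child>` instead.

```cpp
#include <cstddef>

// Hypothetical stand-in for AIToolbox::MDP::OffPolicyControl<Derived>;
// it only stores the state and action space sizes.
template <typename Derived>
class ControlBaseSketch {
    public:
        ControlBaseSketch(std::size_t s, std::size_t a) : S(s), A(a) {}
        std::size_t getS() const { return S; }
        std::size_t getA() const { return A; }
    protected:
        std::size_t S, A;
};

// CRTP: the child names itself as the parent's template argument.
class Child : public ControlBaseSketch<Child> {
    public:
        using Parent = ControlBaseSketch<Child>;
        // Reuse the parent's constructor.
        using ControlBaseSketch<Child>::ControlBaseSketch;
};
```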

In addition, the child must define a function that returns the trace discount for the current experience.

One of its arguments is maxA, the already computed best greedy action for state s.
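The sketch below shows how a CRTP parent can reach such a child function without virtual dispatch. The hook name `getTraceDiscount`, its parameter list, the stand-in parent `TraceHookParent`, and the child's rule are all assumptions made for illustration; check the header for the exact signature the library expects.

```cpp
#include <cstddef>

// Hypothetical stand-in for the CRTP parent: it only shows how the
// child's hook can be called through a static_cast.
template <typename Derived>
class TraceHookParent {
    public:
        double queryTraceDiscount(std::size_t s, std::size_t a, std::size_t s1,
                                  double rew, std::size_t maxA) const {
            return static_cast<const Derived &>(*this).getTraceDiscount(s, a, s1, rew, maxA);
        }
};

// Illustrative child (not a library method): keep the traces when the
// action taken was the greedy one, cut them otherwise.
class GreedyCutSketch : public TraceHookParent<GreedyCutSketch> {
    public:
        // Assumed hook signature; maxA is the greedy action for state s.
        double getTraceDiscount(std::size_t /*s*/, std::size_t a, std::size_t /*s1*/,
                                double /*rew*/, std::size_t maxA) const {
            return (a == maxA) ? 1.0 : 0.0;
        }
};
```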

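This will then be called automatically here to compute by how much to decrease the traces during stepUpdateQ. For example, in ImportanceSampling the function would return the ratio between the probability of the action under the target policy and its probability under the behaviour policy.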

Note how this is different from the OffPolicyEvaluation case, as we assume the target policy to be epsilon greedy.

Member Typedef Documentation

◆ Parent

| using AIToolbox::MDP::OffPolicyControl< Derived >::Parent = OffPolicyBase |

Constructor & Destructor Documentation

◆ OffPolicyControl()

| AIToolbox::MDP::OffPolicyControl< Derived >::OffPolicyControl (size_t s, size_t a, double discount = 1.0, double alpha = 0.1, double tolerance = 0.001, double epsilon = 0.1) |

Basic constructor.

- Parameters
  - s: The size of the state space.
  - a: The size of the action space.
  - discount: The discount of the environment.
  - alpha: The learning rate parameter.
  - tolerance: The trace cutoff parameter.
  - epsilon: The epsilon of the implied epsilon-greedy target policy.

Member Function Documentation

◆ getEpsilon()

| double AIToolbox::MDP::OffPolicyControl< Derived >::getEpsilon () const |

This function will return the currently set epsilon parameter.

- Returns
  - The currently set epsilon parameter.

◆ setEpsilon()

| void AIToolbox::MDP::OffPolicyControl< Derived >::setEpsilon (double e) |

This function sets the epsilon parameter.

The epsilon parameter is the epsilon of the epsilon-greedy target policy that this class assumes. In particular, that policy selects an action uniformly at random with probability epsilon, and selects the greedy action with probability (1-epsilon). This matches the advice to decrease epsilon over time so that the target approaches the greedy policy.

The epsilon parameter must be >= 0.0 and <= 1.0, otherwise the function will throw std::invalid_argument.

- Parameters
  - e: The new epsilon parameter.
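The documented range check can be sketched as follows. `EpsilonHolderSketch` is a hypothetical class written only to mirror the contract described here; the error message and the handling of NaN are assumptions.

```cpp
#include <stdexcept>

// Hypothetical holder mirroring the documented contract of setEpsilon().
class EpsilonHolderSketch {
    public:
        void setEpsilon(double e) {
            // The negated form also rejects NaN; whether the library
            // does the same is an assumption.
            if (!(e >= 0.0 && e <= 1.0))
                throw std::invalid_argument("epsilon must be in [0, 1]");
            epsilon_ = e;
        }
        double getEpsilon() const { return epsilon_; }
    private:
        double epsilon_ = 0.1; // default value listed for the constructor
};
```

An out-of-range value leaves the previously stored epsilon untouched, since the exception is thrown before the assignment.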

◆ stepUpdateQ()

| void AIToolbox::MDP::OffPolicyControl< Derived >::stepUpdateQ (const size_t s, const size_t a, const size_t s1, const double rew) |

This function updates the internal QFunction using the discount set during construction.

This function takes a single experience point and uses it to update the QFunction. This is a very efficient method to keep the QFunction up to date with the latest experience.

- Parameters
  - s: The previous state.
  - a: The action performed.
  - s1: The new state.
  - rew: The reward obtained.

Member Data Documentation

◆ epsilon_

| double AIToolbox::MDP::OffPolicyControl< Derived >::epsilon_ | protected |

The documentation for this class was generated from the following file:

- include/AIToolbox/MDP/Algorithms/Utils/OffPolicyTemplate.hpp